Insurance is an underrated way to unlock secure AI progress.

Insurers are incentivized to truthfully quantify and track risks: if they overstate risks, they get outcompeted; if they understate risks, their payouts bankrupt them.

Ideally, we’d govern AI in a way that both:

- Accelerates AI development and deployment

- Quantifies and prevents the risks that really matter

Insurers want fast AI adoption (they can sell more AI insurance) and want to avoid serious incidents (they pay the bill).

In fact, insurance has played this role for new technology waves for at least 270 years:

Fire: In the 1700s, Philadelphia’s population grew tenfold. As the city grew, its wooden houses were packed ever more closely together, and fire ravaged the city. In 1752, Benjamin Franklin tamed the fires by founding America’s first fire insurer. The insurer had skin in the game to prevent fires, because it paid for the damages. Franklin created early building codes for safer houses and enforced them through fire inspections. Taming the fires let Philadelphia keep growing.

Cars: In postwar America, car accident deaths rose sharply, and car insurers paid the financial bill. In 1959, they created the Insurance Institute for Highway Safety. Insurers developed crash-testing standards that pushed car manufacturers to build safer cars, and they created financial incentives for seatbelt and airbag adoption before regulation came in. Better cars made it safe for cars to become commonplace, and helped save hundreds of thousands of lives.

Nuclear energy: In 1957, the Price-Anderson Act created the private nuclear energy industry in America. At its heart is an insurance scheme, designed to let nuclear operators run risky power plants under market oversight while financially protecting victims in case of an accident. Private insurance covers accidents up to $16bn before a government backstop kicks in to cover catastrophic risks. This gives the government the benefit of well-incentivized private market actors who oversee day-to-day risk management.

Back to AI:

How, exactly, could insurance balance AI progress and security?

Here's the gist:

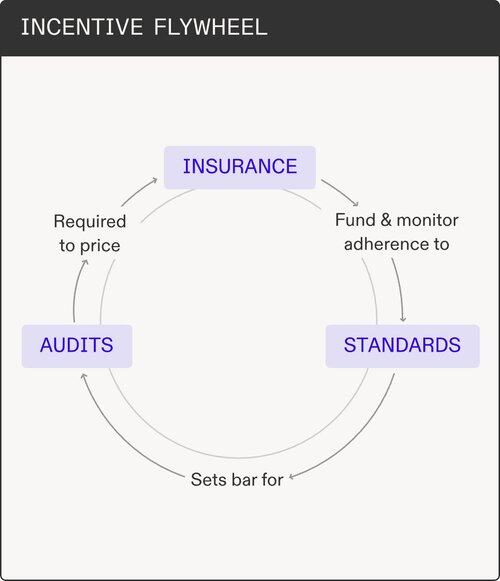

Insurers have both the incentive and the power to make sure the companies they insure act to prevent the risks that matter. They enforce security through an incentive flywheel:

Insurers create standards. Standards outline which risks matter and what companies should do to prevent them. AI companies want to get certified against these standards because certification earns their customers’ confidence and makes them eligible for insurance, just as we see in cybersecurity today with SOC 2.

Standards then set the bar for audits. Insurers audit risks before they insure them, and they get access to private information so they can check whether the standards are actually met. Audits give standards teeth.

Audits in turn let insurers price the risk more accurately. When they get it wrong, they update the standards to reflect the real risks.

This flywheel is emerging most clearly around commercial risks from AI agents, but it extends to labs and data centers for catastrophic risks.

The AI incentive flywheel is emerging:

- GitHub insures customers to win trust

- Labs are coalescing around Safety Commitments, i.e., early standards

- METR is pushing technical evaluations to enable better audits

- RAND is laying a foundation for data center standards
