The biggest question in RL research has always been: what environment are you training in?

It used to be video games (Atari) and board games (Go / Chess).

But now that RL works with LLMs, there is only one environment that matters. And that is your product.

Jul 10, 00:01

Why you should stop working on RL research and instead work on product //

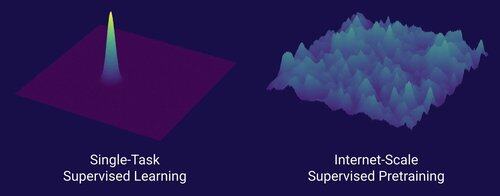

The technology that unlocked the big scaling shift in AI is the internet, not transformers

I think it's well known that data is the most important thing in AI, and also that researchers choose not to work on it anyway. ... What does it mean to work on data (in a scalable way)?

The internet provided a rich source of abundant data that was diverse, offered a natural curriculum, and represented the competencies people actually care about, and it was an economically viable technology to deploy at scale. It became the perfect complement to next-token prediction and the primordial soup for AI to take off.

Without transformers, any number of other approaches could have taken off; we would probably have CNNs or state space models at the level of GPT-4.5. But there hasn't been a dramatic improvement in base models since GPT-4. Reasoning models are great in narrow domains, but they are not as big a leap as GPT-4 was in March 2023 (over two years ago...)

We have something great with reinforcement learning, but my deep fear is that we will repeat the mistakes of the past (2015-2020 era RL) and do RL research that doesn't matter.

Just as the internet was the dual of supervised pretraining, what will be the dual of RL that leads to a massive advancement like GPT-1 -> GPT-4? I think it looks like research-product co-design.
