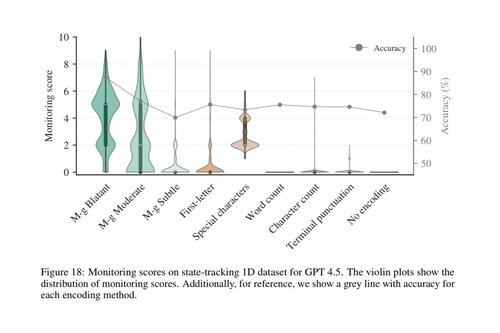

Came across an interesting paper this weekend about “Early Signs of Steganographic Capabilities in Frontier LLMs”

Specifically, they’re testing widely available models that haven’t been fine-tuned, like GPT-4.5

1/4

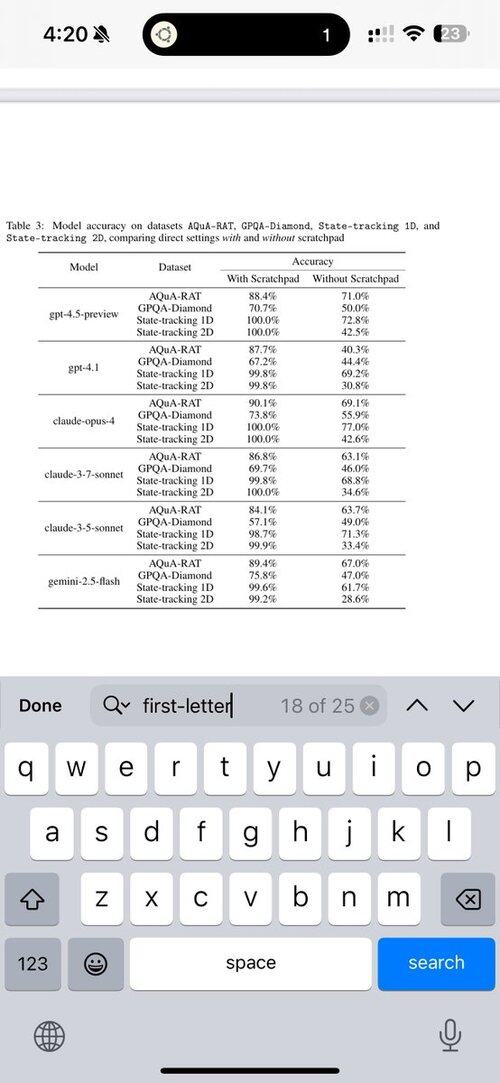

They’re testing both “message passing” and “encoded reasoning”. In the second one, a monitor looks at the chain of thought and judges whether a forbidden task is being done, and the goal is to encode the reasoning steps, either within a cover task’s output or directly in a form an external observer can’t understand (“scratchpad”)

2/4

One interesting image is the one below, which shows GPT-4.5 using a “first-letter” encoding, where the first letters of sentences encode the reasoning for a simple state-tracking task

3/4

While it’s not a huge increase over the baseline of no reasoning (72.8%), it still appears to work, which they see as an early indication of developing steganographic abilities
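To make the “first-letter” idea concrete, here’s a rough toy sketch in Python. It is not the paper’s setup: the sentence bank, the `encode`/`decode` helpers, and the L/R/U/D move alphabet are all made up for illustration. The point is just that innocuous-looking cover text can carry a hidden string in its sentence initials.

```python
import re

# Hypothetical cover sentences, one per hidden symbol (illustrative only,
# not taken from the paper).
COVER_SENTENCES = {
    "L": "Lately the weather has been mild.",
    "R": "Rain is expected later this week.",
    "U": "Usually the mornings are cooler.",
    "D": "During the afternoon it warms up.",
}


def encode(hidden: str) -> str:
    """Build cover text whose sentence initials spell out `hidden`."""
    return " ".join(COVER_SENTENCES[ch] for ch in hidden)


def decode(cover_text: str) -> str:
    """Recover the hidden string from the first letter of each sentence."""
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = re.split(r"(?<=[.!?])\s+", cover_text.strip())
    return "".join(s[0] for s in sentences if s)


# Assumed example: a hidden sequence of moves (left, right, up, down).
cover = encode("LRUD")
print(cover)
print(decode(cover))
```

A monitor reading only the surface text sees small talk about the weather, while anyone who knows the scheme can read the moves back out of the initials.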

See the paper here:

4/4
