
Fast blockchains introduce new challenges for bandwidth management and RPC fairness. Today we’re introducing a mechanism for shaping RPC access using liquid staking commitments. The system is live via FastLane’s ShMonad RPC. This thread explores the architecture and rationale.

🧵

High-throughput networks like Monad (~0.5s block time, ~1s finality) leave little room for reactive throttling. By the time an RPC endpoint detects that it is under a spam attack, the damage has already been done. Mitigation must be proactive and incentive-aligned.

2/

The key constraint is bandwidth. Validator-adjacent nodes are resource-constrained and latency-sensitive. If permissionless access is granted indiscriminately, adversarial clients can crowd out honest participants, degrading UX for users and imposing costs on validators that they have no way to recover.

3/

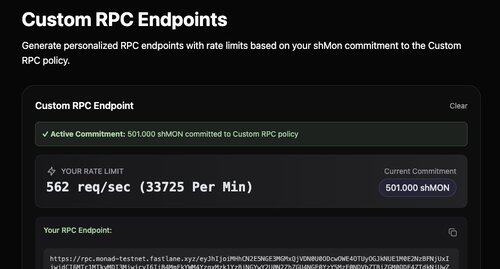

Our solution leverages ShMonad, a programmable liquid staking token (LST) with on-chain commitment capabilities. Users receive a private RPC URL in exchange for committing ShMON to an on-chain “RPC Policy.” This commitment governs access rate limits.

4/

Bandwidth is allocated proportionally:

user's RPS = (user's committed ShMON / total committed ShMON) × RPS_max_global

This yields a dynamically shareable, stake-weighted bandwidth model without introducing centralized off-chain rate-limiters.

5/
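The allocation rule above can be sketched in a few lines of Python. This is an illustrative sketch, not FastLane's implementation: the function name `allocate_rps`, the dictionary input, and the integer ShMON units are assumptions.

```python
def allocate_rps(commitments: dict[str, int], rps_max_global: float) -> dict[str, float]:
    """Split a global RPS budget across users in proportion to committed ShMON.

    `commitments` maps a (hypothetical) user identifier to that user's
    committed ShMON amount in integer base units.
    """
    total = sum(commitments.values())
    if total == 0:
        # Nothing committed: nobody receives an allocation.
        return {user: 0.0 for user in commitments}
    return {
        user: rps_max_global * amount / total
        for user, amount in commitments.items()
    }


# Example: 1,000 RPS of global capacity shared by two committers.
shares = allocate_rps({"alice": 300, "bob": 100}, rps_max_global=1000)
# shares == {"alice": 750.0, "bob": 250.0}
```

Because every share is divided by the same total, a new commitment shrinks everyone else's share automatically, with no off-chain coordination.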

Stake is committed for a fixed duration (currently 20 blocks), which makes the commitment state safe to cache. The relay periodically polls and snapshots the on-chain commitment state. This keeps EVM calls out of the critical path and supports high-frequency use without adding latency.

6/
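A minimal sketch of how a relay might cache commitments, assuming each commitment records the block at which it unlocks (commit block + 20). The class and helper names, the integer units, and the unlock-block field are hypothetical; only the 20-block duration comes from the thread.

```python
from dataclasses import dataclass, field

# Commitment duration cited in the thread ("currently 20 blocks").
COMMIT_DURATION_BLOCKS = 20


@dataclass(frozen=True)
class Commitment:
    amount: int        # committed ShMON, hypothetical integer units
    unlock_block: int  # first block at which the stake may be withdrawn


def commit(amount: int, committed_at_block: int) -> Commitment:
    """Hypothetical helper: a commitment stays locked for the fixed duration."""
    return Commitment(amount, committed_at_block + COMMIT_DURATION_BLOCKS)


@dataclass
class CommitmentCache:
    """Relay-side snapshot of on-chain commitment state (assumed design)."""
    snapshot_block: int = -1
    entries: dict[str, Commitment] = field(default_factory=dict)

    def refresh(self, block: int, onchain_state: dict[str, Commitment]) -> None:
        # Run by a background poller, never on the request path.
        self.snapshot_block = block
        self.entries = dict(onchain_state)

    def active_amount(self, user: str, current_block: int) -> int:
        # Hot-path lookup with no EVM call. A commitment cannot be withdrawn
        # before its unlock block, so the cached amount is safe until then.
        c = self.entries.get(user)
        if c is None or current_block >= c.unlock_block:
            return 0
        return c.amount
```

In this sketch, a commitment made or renewed after the last snapshot stays invisible until the next poll, so the poll interval trades freshness against polling overhead.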

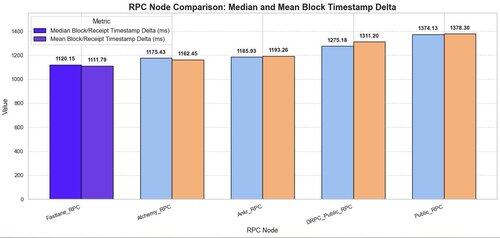

Empirically, this system delivers consistently lower latency than the other RPCs we benchmarked. Across multiple independent benchmarking sessions, FastLane’s ShMonad RPC showed ~20ms lower median and mean response time than the second-fastest provider, and a larger gap against the public RPCs.

7/

ShMON committed to the RPC policy is staked with validators participating in the FastLane relay network (currently >90% of Monad validators). This creates alignment: bandwidth consumers support the same validators who serve their traffic, and validators can potentially be compensated directly through overage penalties.

8/

But to enforce bandwidth limits credibly and trustlessly, we need more than rate limits... we need provable enforcement. For now, users are throttled at the relay. But the roadmap includes on-chain proof systems based on nonce deltas and signed usage receipts.

9/

A minimal design could compare an account's nonces at block heights n and m and penalize any usage above the max RPS (i.e., apply a surcharge and pay it to the validator). But there's an issue: this design is vulnerable to batch-release attacks, where a relay holds back a user's transactions and releases them together so that the traffic appears bursty.

10/
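A minimal sketch of the nonce-delta check, assuming the allowance is simply max RPS × elapsed blocks × block time. The function name and the 0.5 s constant are illustrative assumptions. The usage lines show the batch-release problem: the same 50 transactions look compliant over a ten-block window but appear to exceed the limit when measured over a single block.

```python
# Approximate Monad block time cited earlier in the thread (~0.5 s).
BLOCK_TIME_S = 0.5


def nonce_delta_excess(
    nonce_at_n: int,
    nonce_at_m: int,
    n: int,
    m: int,
    max_rps: float,
    block_time_s: float = BLOCK_TIME_S,
) -> float:
    """Transactions sent between blocks n and m beyond the max-RPS allowance."""
    if m <= n:
        raise ValueError("block m must come after block n")
    sent = nonce_at_m - nonce_at_n
    allowed = max_rps * (m - n) * block_time_s
    return max(0.0, sent - allowed)


# An honest user sends 10 tx/s for 5 s (50 txs). A relay withholds them and
# releases all 50 into block 10, so the on-chain nonce is 0 through block 9
# and 50 at block 10.
full_window = nonce_delta_excess(0, 50, n=0, m=10, max_rps=10)  # 0.0
one_block = nonce_delta_excess(0, 50, n=9, m=10, max_rps=10)    # 45.0
```

Choosing a longer window does not fix this in general: a relay can still shift withheld traffic across a window boundary, and on-chain nonces alone cannot show when the transactions were actually sent.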

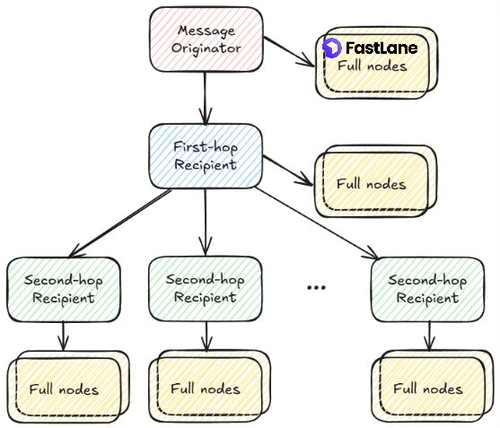

To mitigate this, we introduce a second channel: asynchronous, timestamped usage receipts. When a transaction is submitted, it will be multicast to both the validator and a separate “receipt issuer.” The issuer will return a signed, timestamped object to the sender that includes pre-execution nonce metadata. This keeps tracking and verification overhead out of the hot path between the user and the validator.

11/
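A sketch of what a receipt might contain and how it could be signed and checked. The field names are hypothetical. HMAC-SHA256 from Python's standard library stands in for a real signature scheme: HMAC is symmetric, so anyone able to verify could also forge, and an on-chain proof would instead need a public-key signature (for example secp256k1 ECDSA, which the EVM's `ecrecover` precompile checks).

```python
import hashlib
import hmac
import json
import time
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class UsageReceipt:
    sender: str          # address that submitted the transaction
    tx_hash: str         # hash of the submitted transaction
    nonce: int           # sender nonce observed before execution
    timestamp_ms: int    # issuer's receive time, in milliseconds
    signature: str = ""  # hex tag over all fields above


def _payload(r: UsageReceipt) -> bytes:
    # Canonical byte encoding of the signed fields (signature excluded).
    fields = {"sender": r.sender, "tx_hash": r.tx_hash,
              "nonce": r.nonce, "timestamp_ms": r.timestamp_ms}
    return json.dumps(fields, sort_keys=True, separators=(",", ":")).encode()


def issue_receipt(key: bytes, sender: str, tx_hash: str, nonce: int,
                  now_ms: Optional[int] = None) -> UsageReceipt:
    """Issuer side: timestamp the submission and attach a tag (HMAC stand-in)."""
    ts = int(time.time() * 1000) if now_ms is None else now_ms
    unsigned = UsageReceipt(sender, tx_hash, nonce, ts)
    tag = hmac.new(key, _payload(unsigned), hashlib.sha256).hexdigest()
    return replace(unsigned, signature=tag)


def verify_receipt(key: bytes, r: UsageReceipt) -> bool:
    """Reject receipts whose fields were altered after issuance."""
    expected = hmac.new(key, _payload(r), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, r.signature)
```

Because the timestamp is covered by the tag, a relay cannot shift a receipt's time without invalidating it.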

These signed receipts serve a dual purpose:

1. User feedback: If receipts cease arriving, clients can voluntarily halt traffic to avoid overage fees.

2. On-chain proof: Receipts anchor temporal activity, disambiguating real spam from relay-induced batching.

12/
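Both purposes can be sketched using receipt timestamps alone. Assumptions: receipts are timestamped by the issuer at submission, the limit is checked over a sliding one-second window, and the 2-second client timeout is an arbitrary placeholder. `peak_rate` and `should_keep_sending` are hypothetical names.

```python
from collections import deque


def peak_rate(timestamps_ms: list[int], window_ms: int = 1000) -> int:
    """Max number of receipts falling in any window of length window_ms."""
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")
    window = deque()
    best = 0
    for t in sorted(timestamps_ms):
        window.append(t)
        # Drop receipts that fall outside (t - window_ms, t].
        while window[0] <= t - window_ms:
            window.popleft()
        best = max(best, len(window))
    return best


def should_keep_sending(last_receipt_ms: int, now_ms: int,
                        timeout_ms: int = 2000) -> bool:
    """Client-side rule: pause once receipts stop arriving for timeout_ms."""
    return now_ms - last_receipt_ms <= timeout_ms


# Receipts for 10 tx/s over 5 s carry issuer timestamps 0, 100, ..., 4900 ms,
# even if a relay later lands all 50 transactions in one block.
receipt_times = list(range(0, 5000, 100))
```

Here `peak_rate(receipt_times)` is 10, matching the user's real sending rate, whereas 50 timestamps bunched at the same instant would give 50. This is how receipts can separate genuine spam from relay-induced batching.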

This model supports both EOAs and ERC-4337 userOps (assuming non-shared bundles or vertical integration with our own paymaster). In future versions, we may require that the transaction signer match the policy holder or an address whitelisted at policy commitment. This is still TBD.

13/
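Since this rule is still TBD, the following is only a hypothetical sketch of the check: a policy records its holder plus any addresses whitelisted at commitment time, and a signer passes if it matches either. Which address counts as the "signer" for an ERC-4337 userOp is left open here, and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RpcPolicyCommitment:
    holder: str                              # address that committed ShMON
    whitelist: frozenset[str] = frozenset()  # extra signers named at commit time


def signer_allowed(policy: RpcPolicyCommitment, signer: str) -> bool:
    # EVM addresses are hex and case-insensitive (EIP-55 only adds a
    # mixed-case checksum), so compare them lowercased.
    s = signer.lower()
    return s == policy.holder.lower() or s in {a.lower() for a in policy.whitelist}
```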

Our goal is to move enforcement on-chain without sacrificing performance. Thanks to Monad’s abundant blockspace and fast finality, submitting state proofs, verifying receipts, and charging overage fees is viable on-chain... something infeasible on higher-cost networks.

14/

Overage penalties (analogous to congestion pricing) are still under design. We are waiting for Monad’s fee market structure to be finalized before setting a surcharge schedule: it wouldn't make sense to design the overage fee without knowing the baseline fee.

15/

RPC throughput is currently measured in aggregate (txs + eth_call), but future upgrades will separate bandwidth classes. Read requests will be routed through regionally optimized nodes, taking them off the bottleneck created by the validator's limited bandwidth.

16/
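A sketch of how a relay might split traffic into bandwidth classes. The method names are standard Ethereum JSON-RPC methods, but the allowlist is illustrative rather than complete, and defaulting unknown methods to the write class is a design assumption that keeps anything unrecognized on the scarce budget.

```python
from enum import Enum


class BandwidthClass(Enum):
    READ = "read"    # could be served by regional read nodes
    WRITE = "write"  # must reach validators: the constrained path


# Illustrative allowlist of standard Ethereum JSON-RPC read methods.
READ_METHODS = frozenset({
    "eth_call", "eth_getBalance", "eth_blockNumber", "eth_chainId",
    "eth_getLogs", "eth_getTransactionReceipt", "eth_getTransactionCount",
})


def classify(method: str) -> BandwidthClass:
    # Unknown methods default to WRITE so they count against the scarce budget.
    return BandwidthClass.READ if method in READ_METHODS else BandwidthClass.WRITE


def count_by_class(methods: list[str]) -> dict[BandwidthClass, int]:
    """Per-class request counts, so each class can get its own limit."""
    counts = {c: 0 for c in BandwidthClass}
    for m in methods:
        counts[classify(m)] += 1
    return counts
```

With per-class counts, a stake-weighted limit could apply only to the write class, while reads are metered separately on the regional nodes.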

For latency-sensitive applications (e.g. full nodes, market makers), we support peering and direct block feed via p2p. For full blocks, propagation priority will be stake-weighted (LSWQoS): users with higher committed ShMON receive blocks marginally earlier, subject to inclusion thresholds.

17/
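One possible reading of stake-weighted propagation with inclusion thresholds: peers whose committed ShMON meets a minimum receive the block in descending order of stake. The threshold semantics, the tie-breaking rule, and all names below are assumptions, not the LSWQoS specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peer:
    peer_id: str
    committed_shmon: int  # hypothetical integer units


def propagation_order(peers: list[Peer], inclusion_threshold: int) -> list[str]:
    """Order in which a node would push a new block to its peers.

    Peers below the (hypothetical) inclusion threshold are left out of the
    stake-weighted queue; how they are served is not specified here.
    """
    eligible = [p for p in peers if p.committed_shmon >= inclusion_threshold]
    # Highest stake first; ties broken by peer_id so the order is deterministic.
    ranked = sorted(eligible, key=lambda p: (-p.committed_shmon, p.peer_id))
    return [p.peer_id for p in ranked]
```

For peers `a` (100), `b` (500), `c` (5), and `d` (500) with a threshold of 10, this returns `["b", "d", "a"]`. Each additional unit of stake only moves a peer earlier in the send order, which matches the "marginally earlier" framing rather than granting exclusive access.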

This represents a departure from traditional “best effort” RPC. For read requests to the RPC, committed stake determines how many requests a user can make; for blocks sent from our nodes, it determines the order in which they are sent.

18/

Trustless access control is viable on high-throughput chains if incentives, enforcement, and observability are designed from first principles. The ShMonad RPC is a reference implementation of that thesis. We look forward to iteration and external scrutiny.

19/
