Trending topics

Bonk Eco continues to show strength amid $USELESS rally

Pump.fun to raise $1B token sale, traders speculating on airdrop

Boop.Fun leads the way with a new launchpad on Solana

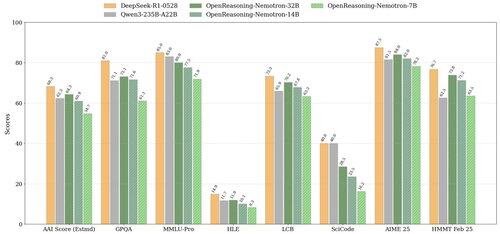

📣 Announcing the release of OpenReasoning-Nemotron: a suite of reasoning-capable LLMs distilled from the DeepSeek R1 0528 671B model. Trained on a massive, high-quality dataset distilled from the new DeepSeek R1 0528, our new 7B, 14B, and 32B models achieve SOTA performance for their respective sizes on a wide range of reasoning benchmarks across mathematics, science, and code. The models are available on @huggingface🤗.
