SB1047 was a bad idea. But Sen. Wiener's latest SB53 is on the right track, and it's important to call out the progress. Here's my reasoning.

My approach to regulating novel technology like AI models is: we don't know how to define "good" mitigation and assurance, but we'll know it when, and if, we see it.

There are two implications.

#1. We shouldn't prescribe risk thresholds or standards of care for model development. We can't agree on the risks that matter, how to measure them, or how much is too much. The only guidance for developers, regulators, and courts is a set of nascent practices determined primarily by closed-source firms relying on paywalls to do the heavy lifting. Prescribing thresholds or standards could chill open innovation by exposing developers to vague or heightened liability for widespread release.

That was SB1047 in a nutshell, along with the roughly five equivalents it inspired across the US this session, such as the RAISE Act in NY. We should avoid that approach. In narrow but crucial respects, these proposals are too far out over their skis.

And yet:

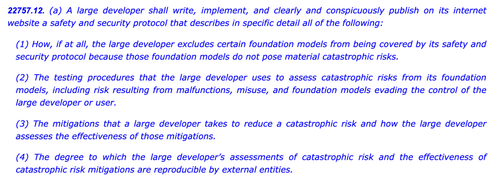

#2. We need to shine a light on industry practices to better understand the diligence, or lack thereof, applied by different firms. If developers have to commit to a safety and security policy, show their working, and leave a paper trail, we can better assess the strength of their claims, monitor for emerging risk, and decide on future intervention.

That is the EU's AI Act and its final Code of Practice (which both OpenAI and Mistral have endorsed) in a nutshell, and it's @Scott_Wiener's latest version of SB53 too.

If we're going to regulate model development, that is fundamentally the better approach: regulating transparency—not capabilities, mitigations, or acceptable risk. It would give at least one US jurisdiction the oversight authority of Brussels, and it would avoid unintended effects on open development.

To be clear, there are still icebergs ahead:

> Complexity. Big Tech or not, these are onerous documentation and reporting obligations. Tactically speaking, the more complex, the more vulnerable this bill will become.

> Incentives. Mandatory public reporting of voluntary risk assessments creates a perverse incentive for developers to under-test their models and turn a blind eye to difficult risks. Permitting developers to disclose their results to auditors or agencies, rather than publicly, may help promote greater candor in their internal assessments.

> Trojan horse. California's hyperactive gut-and-amend culture can make it difficult to vet these bills. If SB53 morphs into a standard of care bill like SB1047 or RAISE, it should be knocked back for the same reasons as before. The more baubles are added to this Christmas tree, the more contentious the bill.

> Breadth. The bill casts a wide net with expansive definitions of catastrophic risk and dangerous capability. For a "mandatory reporting / voluntary practices" bill, they work. If this bill were a standard of care bill, they would be infeasible.

In sum: hats off to Sen. Wiener for thoughtfully engaging and responding to feedback over the past year. It's refreshing to see a bill that actually builds on prior criticism. There are still many paths this bill could take—and it has evolved well beyond the original whistleblowing proposal—but the trajectory is promising.