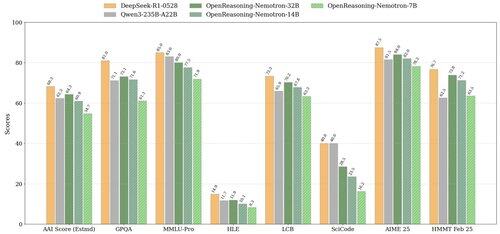

missed this, @NVIDIAAIDev silently dropped Open Reasoning Nemotron models (1.5-32B), SoTA on LiveCodeBench, CC-BY 4.0 licensed 🔥

> 32B competing with Qwen3 235B and DeepSeek R1

> Available across 1.5B, 7B, 14B and 32B sizes

> Supports up to 64K output tokens

> Utilises GenSelect (combines multiple parallel generations)

> Built on top of Qwen 2.5 series

> Allows commercial usage
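For anyone wondering what "combines multiple parallel generations" could look like in practice, here is a rough sketch of the general idea: sample several candidates, then ask a model to pick the best one. This is not NVIDIA's GenSelect implementation. The prompt wording, the `Best: <index>` reply format, and the helper names `build_selection_prompt`, `parse_choice` and `select_best` are all hypothetical, and `generate` stands in for whatever function calls your model. Candidates are sampled one after another here for simplicity; a real setup would batch them.

```python
import re
from typing import Callable, List


def build_selection_prompt(problem: str, candidates: List[str]) -> str:
    """Put the problem and the numbered candidates into one prompt.

    The wording is a hypothetical placeholder, not an official template.
    """
    parts = [f"Problem:\n{problem}\n"]
    for i, cand in enumerate(candidates):
        parts.append(f"Solution {i}:\n{cand}\n")
    parts.append("Reply with the index of the best solution, e.g. 'Best: 0'.")
    return "\n".join(parts)


def parse_choice(reply: str, n: int) -> int:
    """Read the chosen index; fall back to 0 if it is missing or out of range."""
    match = re.search(r"Best:\s*(\d+)", reply)
    if match is None:
        return 0
    idx = int(match.group(1))
    return idx if 0 <= idx < n else 0


def select_best(problem: str, generate: Callable[[str], str], n: int = 4) -> str:
    """Sample n candidates, then make one extra call that selects among them."""
    candidates = [generate(problem) for _ in range(n)]
    reply = generate(build_selection_prompt(problem, candidates))
    return candidates[parse_choice(reply, len(candidates))]
```

The fallback to index 0 is just a design choice to keep the sketch total; you may prefer to raise an error or retry when the selection reply cannot be parsed.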

Works out of the box in transformers, vllm, mlx, llama.cpp and more!
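A minimal sketch of trying it with transformers, under a few assumptions: the repo id `nvidia/OpenReasoning-Nemotron-1.5B` is my guess at the Hub name (check the model card), the tokenizer is assumed to ship a chat template, and `device_map="auto"` needs the `accelerate` package installed. `build_messages` and `generate_answer` are just illustrative helper names.

```python
# Assumed Hub repo id; verify against the actual model card before use.
MODEL_ID = "nvidia/OpenReasoning-Nemotron-1.5B"


def build_messages(question: str) -> list:
    """Wrap a question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Download the model (on first call) and generate one answer."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)
```

Calling `generate_answer("Write a function that reverses a linked list.")` downloads the weights on first use. Reasoning models tend to produce long outputs, so raise `max_new_tokens` if answers get cut off.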
