Traditional RAG vs. Graph RAG, clearly explained (with visuals):

Top-k retrieval in RAG often falls short when the answer depends on the whole document.

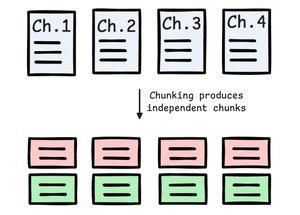

Imagine you want to summarize a biography where each chapter details a specific accomplishment of an individual.

Traditional RAG struggles here because it retrieves only the top-k chunks, while the task needs the entire context.
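To make the failure concrete, here is a minimal sketch of top-k retrieval over the biography example. It assumes a toy word-overlap score in place of embedding similarity and four hypothetical chapter texts; `tokenize` and `top_k` are illustrative names, not from any library.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercased word set: a crude, hypothetical stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def top_k(query: str, chunks: list[str], k: int) -> list[str]:
    """Return the k chunks that share the most words with the query."""
    q = tokenize(query)
    # sorted() is stable even with reverse=True, so ties keep chunk order.
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]

# Hypothetical biography of person P: one accomplishment per chapter.
chapters = [
    "Chapter 1: P founded a robotics startup.",
    "Chapter 2: P won a national chess title.",
    "Chapter 3: P published a bestselling novel.",
    "Chapter 4: P climbed Mount Everest.",
]

retrieved = top_k("Summarize P's accomplishments", chapters, k=2)
print(f"{len(retrieved)} of {len(chapters)} chapters reach the LLM")
```

Whatever the scoring function, with k=2 only two of the four chapters reach the LLM, so any summary built from them misses half of P's accomplishments.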

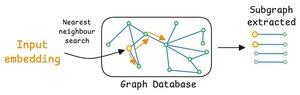

Graph RAG solves this by:

- Building a graph of entities and relationships from the documents.

- Traversing the graph for context retrieval.

- Sending the entire context to the LLM for a response.

The visual shows how it differs from naive RAG:

Let's see how Graph RAG solves the above problem.

First, a system (typically an LLM) will create a graph from documents.
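Here is a minimal sketch of the graph-construction step. It assumes the LLM was prompted to return one `subject | relation | object` triple per line (a hypothetical format chosen for illustration); `parse_triples` and `build_graph` are illustrative names, and the graph is a plain adjacency dict rather than a graph database.

```python
from collections import defaultdict

def parse_triples(llm_output: str) -> list[tuple[str, str, str]]:
    """Parse 'subject | relation | object' lines, skipping malformed ones."""
    triples = []
    for line in llm_output.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triples.append((parts[0], parts[1], parts[2]))
    return triples

def build_graph(triples: list[tuple[str, str, str]]) -> dict[str, list[tuple[str, str]]]:
    """Directed adjacency list: subject -> list of (relation, object) pairs."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return graph

# Hypothetical LLM extraction output, including one noisy line.
llm_output = """
P | founded | robotics startup
P | won | national chess title
P | published | bestselling novel
this line is noise
"""

graph = build_graph(parse_triples(llm_output))
print(graph["P"])
```

Skipping malformed lines keeps the sketch tolerant of stray LLM text; a real system would also need to merge duplicate entity names.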

This graph will have a subgraph for the person (P) in which each accomplishment is one hop away from P's entity node.

During summarization, the system can do a graph traversal to fetch all the relevant context related to P's accomplishments.
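The traversal can be sketched as a depth-limited breadth-first search. This is an illustrative choice, not necessarily how any particular Graph RAG system traverses; the graph contents and the `traverse` helper are hypothetical.

```python
from collections import deque

def traverse(graph: dict[str, list[tuple[str, str]]], start: str,
             max_hops: int) -> list[tuple[str, str, str]]:
    """Collect every (subject, relation, object) edge reachable from
    `start` within `max_hops` hops, using breadth-first search."""
    facts = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand nodes at the hop limit
        for rel, nbr in graph.get(node, []):
            facts.append((node, rel, nbr))
            if nbr not in seen:
                seen.add(nbr)
                queue.append((nbr, depth + 1))
    return facts

# Hypothetical graph: each accomplishment is one hop from P.
graph = {
    "P": [("founded", "robotics startup"), ("won", "national chess title"),
          ("published", "bestselling novel"), ("climbed", "Mount Everest")],
    "robotics startup": [("based in", "Helsinki")],
}

one_hop = traverse(graph, "P", max_hops=1)
print(len(one_hop))  # → 4
```

Because every accomplishment sits one hop from P, `max_hops=1` already returns all four, regardless of how many chapters they came from; raising the limit pulls in related details such as the startup's location.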

With the entire context, the LLM can produce a complete answer; naive RAG, limited to the top-k chunks, cannot.
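The final step only needs to serialize the retrieved facts into the prompt. Below is a minimal sketch; the prompt template, the `build_prompt` name, and the fact list are all hypothetical, and the actual LLM call is left out because it depends on whichever client you use.

```python
def build_prompt(question: str, facts: list[tuple[str, str, str]]) -> str:
    """Serialize every retrieved fact into one prompt (hypothetical template)."""
    context = "\n".join(f"- {subj} {rel} {obj}" for subj, rel, obj in facts)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )

# Facts as a graph traversal might return them (hypothetical data).
facts = [
    ("P", "founded", "a robotics startup"),
    ("P", "won", "a national chess title"),
    ("P", "published", "a bestselling novel"),
    ("P", "climbed", "Mount Everest"),
]

prompt = build_prompt("Summarize P's accomplishments.", facts)
print(prompt)
# The prompt would then be sent to an LLM client of your choice (not shown).
```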

Graph RAG systems can also outperform naive RAG because LLMs tend to reason well over structured data, such as entities and the explicit relationships between them.

I hope this clarifies what Graph RAG is and the problems it can solve!

I'll leave you with a visual representation of how it works compared to traditional RAG.

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar for more insights and tutorials on LLMs, AI Agents, and Machine Learning!

July 29, 21:11
