this seems really important:

it is totally plausible that a model could get IMO gold without *any* reinforcement learning, given a perfectly-crafted prompt

we just don't know, and lack tools to efficiently search through prompt space. glad to see at least someone is trying

July 29, 2025

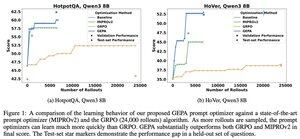

How does prompt optimization compare to RL algos like GRPO?

GRPO needs 1000s of rollouts, but humans can learn from a few trials—by reflecting on what worked & what didn't.

Meet GEPA: a reflective prompt optimizer that can outperform GRPO by up to 20% with 35x fewer rollouts!🧵
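To make the "reflect on what worked & what didn't" idea concrete, here is a toy sketch of a reflective prompt-search loop under a fixed rollout budget. It is not GEPA's actual algorithm: `TASKS`, `run_rollout`, `reflect`, and `optimize` are all hypothetical names, and the "model" is a stub that solves a task only when the prompt contains the right hint. A real setup would call an LLM for both the rollouts and the reflection step.

```python
from dataclasses import dataclass

# Hypothetical stand-in for an LLM: each task is "solved" only if the
# prompt contains the hint that task needs. Real rollouts would call a model.
TASKS = {
    "algebra": "show your work",
    "geometry": "draw a diagram",
    "proof": "check edge cases",
}


@dataclass
class Rollout:
    task: str
    solved: bool
    feedback: str  # textual trace that the reflection step can read


def run_rollout(prompt: str, task: str) -> Rollout:
    """Run one (stubbed) rollout and return a result with textual feedback."""
    needed = TASKS[task]
    solved = needed in prompt
    feedback = "ok" if solved else f"failed {task}: prompt never says '{needed}'"
    return Rollout(task, solved, feedback)


def reflect(prompt: str, rollouts: list[Rollout]) -> str:
    """Toy reflection: read the first failure's feedback and patch the prompt."""
    for r in rollouts:
        if not r.solved:
            hint = r.feedback.split("'")[1]  # text between the quotes
            return f"{prompt} Always {hint}."
    return prompt


def optimize(seed: str, budget: int) -> tuple[str, int]:
    """Alternate evaluation and reflection until solved or out of budget."""
    prompt, used = seed, 0
    while used + len(TASKS) <= budget:
        rollouts = [run_rollout(prompt, t) for t in TASKS]
        used += len(rollouts)
        if all(r.solved for r in rollouts):
            break
        prompt = reflect(prompt, rollouts)
    return prompt, used


best, used = optimize("Solve the problem.", budget=30)
print(best)
print("rollouts used:", used)
```

Each pass reads the textual feedback rather than only a scalar reward, which is why this toy needs so few rollouts. Whether that carries over to real models is the empirical question the thread is about.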
