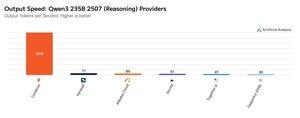

This week Cerebras has been demonstrating its ability to host large mixture-of-experts (MoE) models at very high speeds, launching Qwen3 235B 2507 and Qwen3 Coder 480B endpoints at >1,500 output tokens/s.

➤ @CerebrasSystems now offers endpoints for both Qwen3 235B 2507 Reasoning & Non-reasoning. Both models have 235B total parameters with 22B active.

➤ Qwen3 235B 2507 Reasoning offers intelligence comparable to o4-mini (high) & DeepSeek R1 0528. The Non-reasoning variant offers intelligence comparable to Kimi K2 and well above GPT-4.1 and Llama 4 Maverick.

➤ Qwen3 Coder 480B has 480B total parameters with 35B active. This model is particularly strong for agentic coding and can be used in a variety of coding agent tools, including the Qwen3-Coder CLI.

Cerebras’ launches mark the first time this level of intelligence has been accessible at these output speeds, and they have the potential to unlock new use cases, such as using a reasoning model for each step of an agent without having to wait minutes.
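To make the "without having to wait minutes" point concrete, here is a rough back-of-the-envelope sketch. Only the 1,500 output tokens/s figure comes from the post. The 5,000 tokens per step, the 10 agent steps, and the 50 tokens/s comparison speed are illustrative assumptions, and the estimate ignores time to first token and network latency.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to stream output_tokens at a steady output speed (latency ignored)."""
    return output_tokens / tokens_per_second


# Assumed workload (hypothetical, not from the post): an agent that runs
# 10 steps, each producing about 5,000 reasoning + answer tokens.
TOKENS_PER_STEP = 5_000
AGENT_STEPS = 10

# 1,500 tokens/s is the speed quoted in the post; 50 tokens/s is an
# assumed slower endpoint used only for comparison.
for label, speed in [("1,500 tokens/s", 1_500.0), ("50 tokens/s (assumed)", 50.0)]:
    per_step = generation_seconds(TOKENS_PER_STEP, speed)
    total = per_step * AGENT_STEPS
    print(f"{label}: {per_step:.1f} s per step, {total / 60:.1f} min for {AGENT_STEPS} steps")
```

Under these assumptions each step takes a few seconds at the quoted speed, versus well over a minute per step at the slower one.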
