Nathan Lambert

Figuring out AI @allen_ai, open models, RLHF, fine-tuning, etc

Contact via email.

Writes @interconnectsai

Wrote The RLHF Book

Mountain runner

for your entertainment :)

AI Engineer · Jul 20, 05:31

🆕 Releasing our entire RL + Reasoning track!

featuring:

• @willccbb, Prime Intellect

• @GregKamradt, Arc Prize

• @natolambert, AI2/Interconnects

• @corbtt, OpenPipe

• @achowdhery, Reflection

• @ryanmart3n, Bespoke

• @ChrSzegedy, Morph

with special 3 hour workshop from:

@danielhanchen of Unsloth!

start here:

Happy weekend watching! And thanks to @OpenPipeAI for supporting and hosting this track!


The point of this is to avoid psyops, not to take away from an obvious, major technical accomplishment. cmon fam, I'm not an AI hater

so many haters in the replies

Nathan Lambert · Jul 19, 21:23

Not falling for OpenAI’s hype-vague posting about the new IMO gold model with “general purpose RL” and whatever else “breakthrough.” Google also got IMO gold (harder than mastering AIME), but remember, simple ideas scale best.


Nathan Lambert reposted

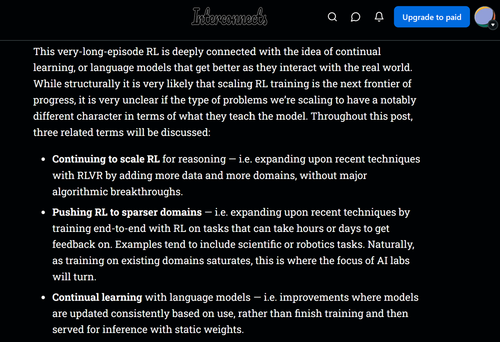

Has OpenAI achieved very-long-episode RL with this experimental model?

Screenshot from @natolambert's article on "What comes next with reinforcement learning".

Nathan says in this article: where current methods generate 10K-100K tokens per answer for math or code problems during training, the sort of problems people discuss applying next-generation RL training to would be 1M-100M tokens per answer. This involves wrapping multiple inference calls, prompts, and interactions with an environment within one episode that the policy is updated against.

Maybe this breakthrough is a combination of both: very-long-episode RL and scaling test-time compute (TTC) to 1M-100M tokens per answer!

