Andrej Karpathy

Building @EurekaLabsAI. Previously Director of AI @ Tesla, founding team @ OpenAI, CS231n/PhD @ Stanford. I like to train large deep neural nets 🧠🤖💥

Diffusion video models but now - **realtime**!

Simple video filters are real-time but can only do basic re-coloring and styles. Video diffusion models (Veo and friends) are magic, but they take many seconds/minutes to generate. MirageLSD is real-time magic. Unlike simple video filters, diffusion models actually *understand* what they are looking at, so they can style all parts of the feed intelligently (e.g. putting hats on heads, or light sabers into hands, etc.). And they are arbitrarily steerable, e.g. by text prompts.

Customizable, intelligent video filters unlock many cool ideas over time:

- transform camera feeds into alternate realities

- direct and shoot your own movies, acting out scenes with props. Realtime => instant feedback/review.

- vibe code games around just simple spheres/blocks, then use a real-time diffusion model to texture your game to make it beautiful.

- style and customize any video feed: games, videos, ... e.g. Skyrim but "MORE EPIC"? DOOM II but modern Unreal Engine quality with just a prompt? Horror movie but "cute, pink and bunnies only"? I don't know!

- zoom call backgrounds+++

- real-time try on clothes virtually

- glasses: e.g. cartoonify your vision in real time?

- we can now build the Harry Potter Mirror of Erised, showing the "raw feed" of you in the mirror but augmented with your deepest desires (as inferred by the AI).

- I don't know, I'm probably missing the biggest one, so many things!

(Disclosure: I am a (very small) angel investor in Decart. I was excited because imo this technology will get very good very fast, and it feels general and powerful, but it's also technically very difficult. Congrats on the launch to the team!)

Decart · 18 Jul, 04:44

Introducing MirageLSD: The First Live-Stream Diffusion (LSD) AI Model

Input any video stream, from a camera or video chat to a computer screen or game, and transform it into any world you desire, in real-time (<40ms latency).

Here’s how it works (w/ demo you can use!):

I often rant about how 99% of attention is about to be LLM attention instead of human attention. What does a research paper look like for an LLM instead of a human? It’s definitely not a pdf. There is huge space for an extremely valuable “research app” that figures this out.

Michael Levin · 10 Jul, 22:47

I'm constantly irritated that I don't have time to read the torrent of cool papers coming faster and faster from amazing people in relevant fields. Other scientists have the same issue and have no time to read most of my lengthy conceptual papers either. So whom are we writing these papers for?

I guess, at least until they fall into the same issue from their own work, AIs will be the only ones who actually have the bandwidth to read all this stuff. I'm not specifically talking about today's language models - let's assume we mean whatever inevitable AI shows up that is able to read the literature and have an impact on the research (whether by talking to humans or by running lab automation/robot scientist platforms).

So then: how should we be writing, knowing that a lot of our audience will be AI (plus cyborgs, hybrots, augmented humans, etc.)? Maybe it's too early to know what to do, but we better start thinking about it because assuming our audience will always be today's humans seems untenable. Taking seriously the idea that someday the impactful audience will be very different, and that the things we write now are in some sense a training set for truly diverse future beings, how does our writing change? or does it?

what say you @danfaggella @mpshanahan @Plinz @blaiseaguera ?

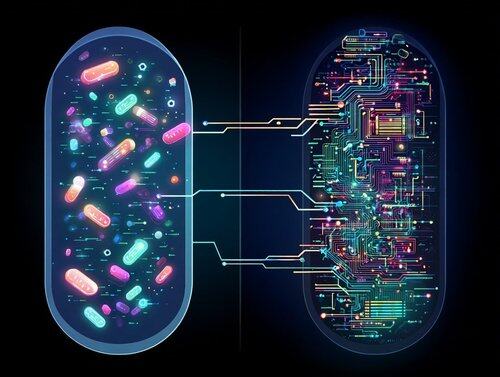

How to build a thriving open source community by writing code like bacteria do 🦠. Bacterial code (genomes) are:

- small (each line of code costs energy)

- modular (organized into groups of swappable operons)

- self-contained (easily "copy paste-able" via horizontal gene transfer)

If chunks of code are small, modular, self-contained and trivial to copy-and-paste, the community can thrive via horizontal gene transfer. For any function (gene) or class (operon) that you write: can you imagine someone going "yoink" without knowing the rest of your code or having to import anything new, to gain a benefit? Could your code be a trending GitHub gist?
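As a rough illustration of the "yoink" test, here is a minimal sketch of a function in that style. The helper name `chunked` is hypothetical and not from the original post; the point is only that it is small, has no imports, and can be copy-pasted without knowing anything else about the codebase it came from.

```python
def chunked(items, size):
    """Yield consecutive lists of at most `size` elements from `items`.

    Self-contained on purpose: no imports, no globals, no project-specific types.
    """
    if size <= 0:
        raise ValueError("size must be a positive integer")
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        # Emit the final, possibly shorter, batch.
        yield batch


batches = list(chunked([1, 2, 3, 4, 5], 2))
print(batches)
```

Someone could lift this into a gist or another project as-is, which is the property the bacterial analogy is after.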

This coding style guide has allowed bacteria to colonize every ecological nook from cold to hot to acidic or alkaline in the depths of the Earth and the vacuum of space, along with an insane diversity of carbon anabolism, energy metabolism, etc. It excels at rapid prototyping but... it can't build complex life. By comparison, the eukaryotic genome is a significantly larger, more complex, organized and coupled monorepo. Significantly less inventive but necessary for complex life - for building entire organs and coordinating their activity. With our advantage of intelligent design, it should be possible to take advantage of both. Build a eukaryotic monorepo backbone if you have to, but maximize bacterial DNA.

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing.

Its features are slowly crystallizing:

- Natively multimodal text/vision/audio at both input and output.

- Matryoshka-style architecture allowing a dial of capability up and down at test time.

- Reasoning, also with a dial. (system 2)

- Aggressively tool-using.

- On-device finetuning LoRA slots for test-time training, personalization and customization.

- Delegates and double checks just the right parts with the oracles in the cloud if internet is available.
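One possible reading of the "Matryoshka-style" dial, sketched here on embeddings rather than on a full model: if a vector is trained so that its leading dimensions already form a usable representation, capability can be dialed down at test time by keeping only a prefix. The function name `truncate_embedding` and the toy vector are assumptions for illustration, not something the post specifies, and a real cognitive core could implement the dial quite differently.

```python
import math


def truncate_embedding(vec, dim):
    """Keep the first `dim` components of `vec` and rescale to unit length.

    Only meaningful if the embedding was trained so that prefixes are useful
    on their own (an assumption of this sketch).
    """
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and len(vec)")
    prefix = vec[:dim]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        return list(prefix)
    return [x / norm for x in prefix]


full = [0.5, -0.5, 0.5, 0.5, 0.1, -0.2, 0.05, 0.0]  # toy 8-dim vector
small = truncate_embedding(full, 4)  # "dial down" to 4 dims
print(len(small), small)
```

The smaller vector is cheaper to store and compare, at the cost of whatever information lived in the dropped dimensions.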

It doesn't know that William the Conqueror's reign ended on September 9, 1087, but it vaguely recognizes the name and can look up the date. It can't recite the SHA-256 of the empty string as e3b0c442..., but it can calculate it quickly should you really want it.
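The "calculate it instead of memorizing it" point is easy to make concrete. A minimal sketch using Python's standard `hashlib` module (the wrapper name `sha256_hex` is just for illustration):

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


empty_digest = sha256_hex(b"")
print(empty_digest)  # begins with e3b0c442, matching the prefix quoted above
```

A tool-using model only needs to know that such a call exists and when to make it, not the 64 hex characters themselves.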

What LLM personal computing lacks in broad world knowledge and top tier problem-solving capability it will make up in super low interaction latency (especially as multimodal matures), direct / private access to data and state, offline continuity, sovereignty ("not your weights not your brain"). i.e. many of the same reasons we like, use and buy personal computers instead of having thin clients access a cloud via remote desktop or so.
