My inaugural @delphi_intel post is on Gen AI Video Models. Summary below (1/10)👇

s/o to @moonshot6666 for his feedback on this essay & @PJaccetturo for this dope hype video I will shamelessly steal.

TL;DR: video models are 2-3 years behind text models. They are getting very good, very fast.

1. Humans are visual creatures.

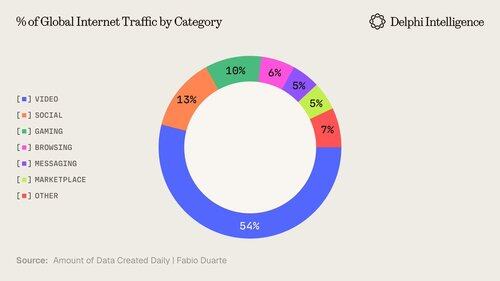

The mix of Gen AI content modalities will likely trend toward a distribution similar to the web's:

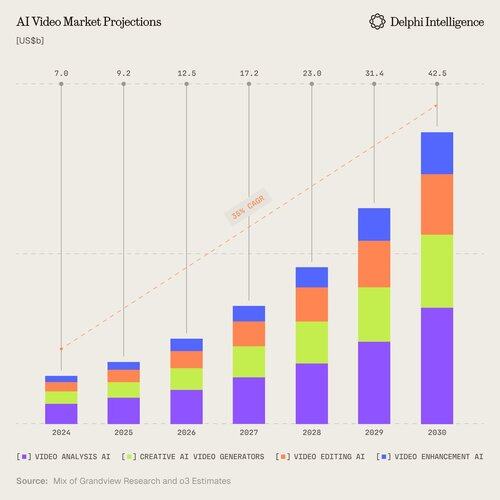

2. The market is big: >US$40B by 2030 (including relevant adjacencies).

The disruption in marketing, entertainment, and education is obvious, but there are also clear implications for robotics, surveillance, and long-horizon agentic tasks.

3. The landscape has a lot of cross-stack competition

It includes model companies, multimodal apps, talking avatars, lip sync, and more, not to mention all of the infra required to fuel truly multimodal models and apps.

s/o to @venturetwins and @a16z for the map

4. East vs. West.

The race is largely split between the US and China. In video models, China is leading (9 of the top 15 models).

s/o @ArtificialAnlys

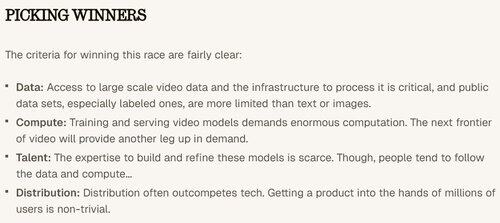

6. Big Tech advantage: piping these new capabilities into ecosystems of >1B users will be high-ROI

Based on the attributes below, it's not shocking that large players like $GOOG, $META, $TCEHY, $BABA, and ByteDance are well positioned.

7. Value accrual:

At the same time, we see pockets across the stack, particularly in infra and apps, where new entrants can carve out large businesses.

(Yes, you will need to read the actual report for any alpha, lazy ass)

8. True multimodality

Today, video models look like toys. But video data and simulations are increasingly essential inputs for long-horizon agentic tasks and for kickstarting the robotics revolution.

Video models might just prove to be the spark.