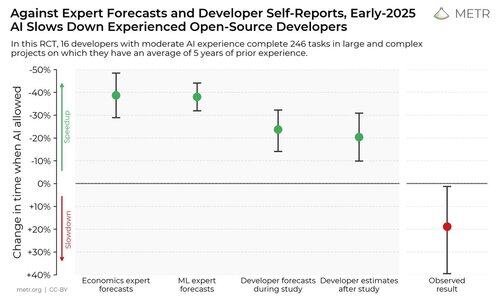

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers.

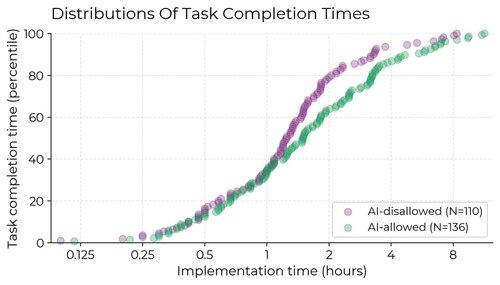

The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

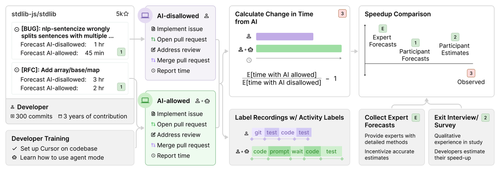

We recruited 16 experienced open-source developers to work on 246 real tasks in their own repositories (avg 22k+ stars, 1M+ lines of code).

We randomly assigned each task to either allow AI (typically Cursor Pro w/ Claude 3.5/3.7) or disallow AI help.
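A minimal sketch of what per-task random assignment could look like. It assumes an independent fair coin flip for each task, which the thread does not confirm; the function name `assign_tasks`, the condition labels, and the placeholder task IDs are all hypothetical.

```python
import random


def assign_tasks(task_ids, seed=0):
    """Assign each task to a condition with an independent fair coin flip.

    Assumption: simple (unblocked) randomization. The actual study may have
    used a different scheme.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    conditions = ["ai_allowed", "ai_disallowed"]
    return {task_id: rng.choice(conditions) for task_id in task_ids}


# 246 placeholder task IDs, matching the task count reported in the thread.
tasks = [f"task-{i}" for i in range(1, 247)]
assignment = assign_tasks(tasks, seed=42)
```

Randomizing at the task level, rather than the developer level, means each developer works under both conditions, so differences in skill between developers do not get confused with the effect of the tools.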

At the beginning of the study, developers forecast that AI would speed them up by 24%. After actually doing the work, they estimated that it had sped them up by 20%. In fact, they were slowed down by 19%.

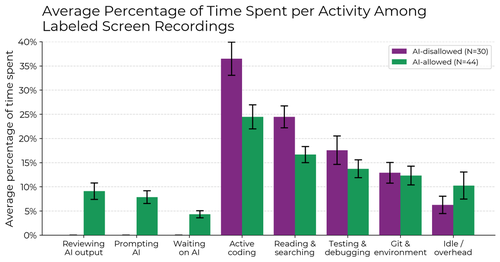

When AI is allowed, developers spend less time actively coding and searching for information, and instead spend time prompting AI, waiting on or reviewing AI outputs, and sitting idle. We find no single reason for the slowdown; it's driven by a combination of factors.

Why did we run this study?

AI agent benchmarks have limitations—they’re self-contained, use algorithmic scoring, and lack live human interaction. This can make it difficult to directly infer real-world impact.

If we want an early warning system for whether AI R&D is being accelerated by AI itself, or even automated, it would be useful to be able to directly measure this in real-world engineer trials, rather than relying on proxies like benchmarks or even noisier information like anecdotes.

What do we take away?

1. It seems likely that for some important settings, recent AI tooling has not increased productivity (and may in fact have decreased it).

2. Self-reports of speedup are unreliable—to understand AI’s impact on productivity, we need experiments in the wild.
