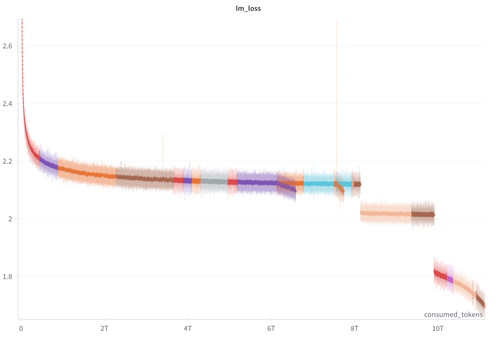

We've just released 100+ intermediate checkpoints and our training logs from SmolLM3-3B training.

We hope this will be useful to researchers working on mechanistic interpretability, training dynamics, RL and other topics :)

Training logs:

-> Usual training loss (the gaps in the loss are due to changes in the data mixture), grad_norm, etc.

-> Per layer/block metrics (l1/l2 norm, mean, min, max, kurtosis)
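
For readers unfamiliar with the per-layer metrics listed above, here is a minimal, dependency-free sketch of how such statistics could be computed for one flattened tensor. It is an illustration only, not the actual logging code: the function name `tensor_stats` is hypothetical, the post does not say whether the metrics are taken over weights, activations or gradients, and kurtosis is assumed here to be the non-excess (Pearson) definition, where a normal distribution gives 3.

```python
import math


def tensor_stats(values):
    """Summary statistics for a flat list of floats (e.g. one layer's values).

    Hypothetical helper for illustration; not taken from the released logs.
    """
    n = len(values)
    if n == 0:
        raise ValueError("values must be non-empty")
    mean = sum(values) / n
    # Population (biased) second and fourth central moments.
    var = sum((v - mean) ** 2 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    # Assumption: Pearson kurtosis (not excess); undefined for constant input.
    kurtosis = m4 / var**2 if var > 0 else float("nan")
    return {
        "l1_norm": sum(abs(v) for v in values),
        "l2_norm": math.sqrt(sum(v * v for v in values)),
        "mean": mean,
        "min": min(values),
        "max": max(values),
        "kurtosis": kurtosis,
    }


stats = tensor_stats([-2.0, -1.0, 0.0, 1.0, 2.0])
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

A rising kurtosis in a given block is often read as a sign of outlier values emerging, which is one reason this kind of metric is tracked alongside the norms.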

Checkpoints:

-> pre-training: every 40k steps (94.4B tokens)

-> long context extension: every 4k steps (9.4B tokens)

-> post-training: SFT, mid-training, APO soup, LC expert
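
As a quick sanity check on the checkpoint spacing above, the implied number of tokens per optimizer step can be worked out directly. This sketch assumes the token counts in parentheses are the tokens seen between consecutive checkpoints; the function name `tokens_per_step` is hypothetical.

```python
def tokens_per_step(tokens_between_checkpoints, steps_between_checkpoints):
    """Implied tokens consumed per training step (assumes a constant batch size)."""
    return tokens_between_checkpoints / steps_between_checkpoints


# Figures from the post: 94.4B tokens per 40k steps, 9.4B tokens per 4k steps.
pretrain = tokens_per_step(94.4e9, 40_000)
long_ctx = tokens_per_step(9.4e9, 4_000)

print(f"pre-training:           {pretrain / 1e6:.2f}M tokens/step")
print(f"long context extension: {long_ctx / 1e6:.2f}M tokens/step")
```

Both stages come out at roughly 2.35M to 2.36M tokens per step; the small difference is plausibly just rounding in the quoted 9.4B figure.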
