
I increasingly think the skill cap for LLM-assisted engineering is probably about as high as for engineering generally. (It’s certainly deeper than, e.g., Excel, and there are orders of magnitude of difference in Excel proficiency.)

10 hours ago

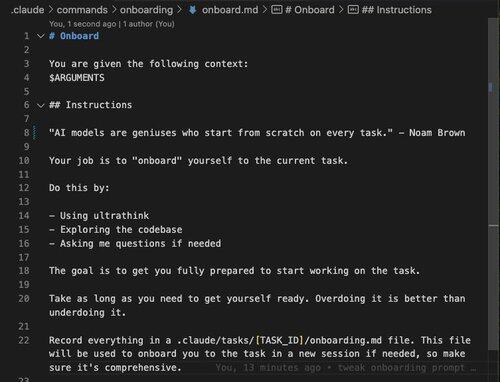

To 10x AI coding agents you need to *obsess* over context engineering above all else.

Great Context = Great Plan = Great Result

AI models are geniuses who start from scratch every time.

So onboard them by going overboard on context.

Use this prompt as a starting point.

Skill cap is a gamerism. It captures the intuition that there are some games where the difference between the best player and the worst player is marginal (tic-tac-toe), and others where this is very untrue and one can study the game for a lifetime while improving continually (poker, Go).

This isn’t merely at the level of the micro mechanics or prompt engineering or context engineering. There are entire modes of working that lean into what the LLM does best, and the amount of time you can spend on them is not fixed across all sessions or projects.

And so, given that you have control or influence over the totality of the system, you have meta-decisions to make: “Should I want this system to have more subcomponents of the sort that LLMs just *crank* through? Or should I make other architecture or similar choices?”

And yeah, we’re years from learning what the maintenance loop looks like for LLM-delivered subsystems, but the smart money is probably “A human may be aware in a general sense that it is happening.”

Anyhow, this stuff is very, very fun, and I find myself in a weird place where I wish vacation was over so I could be writing code for free.

(As one of the many adaptations to this style: it benefits from multi-hour blocks of uninterrupted time, because the agents thrive on context and the first few minutes of a session suck. This is tough to deliver from the kitchen table at the in-laws’.)
