Some personal news: Since leaving OpenAI, I’ve been writing publicly about how to build an AI future that’s actually exciting: one that avoids the worst risks and is actually good.

I’m excited to continue this work as a fellow of the Roots of Progress Institute.

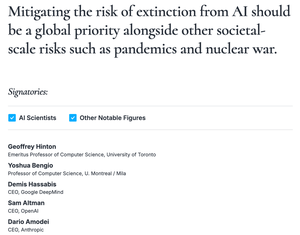

When I left OpenAI, I wrote that I’m pretty terrified of where AI is headed. This is still true, and I know so many AI lab employees who want this all to stop, but feel individually powerless.

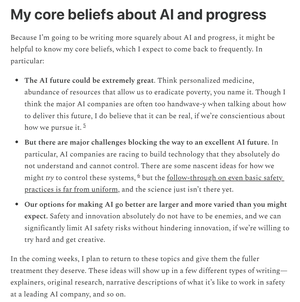

But I also believe in a deeply positive future with AI, if risks are handled well.

To be clear, I think the AI labs today are way off-track for achieving a positive future: They aren't taking AI’s risks seriously enough, and they aren’t making plans that are actually exciting.

A simple way of describing the risks: AI companies are racing to build technology that they absolutely do not understand and cannot control, and that they themselves believe could be extremely destructive.

And in terms of benefits: Asserting that AI will solve climate change does not make it so.

In my writing, I aim to give you the honest, clear-eyed take on what’s happening in AI, from someone who’s worked in the field for nearly a decade now.

Where are AI companies playing word games? What types of risk-reduction are easy? And what am I so concerned about anyway?

I'm excited to be joining a community of other writers, but rest assured, I can still say whatever I'd like.

There's full independence: The only thing that should change is, hopefully, my writing style, for the better.

If you want to follow along, you can subscribe to Clear-Eyed AI at that proverbial other site (click it from my bio).

It’s free; I just ask that you spread the word to others who might like it too.

I also never properly introduced myself there, so today’s post is about my AI safety origin story and some of the core ideas that drive my thinking.

Also, a bonus story about the surprising relationship between AI safety and “Chinese chicken salad.”
