
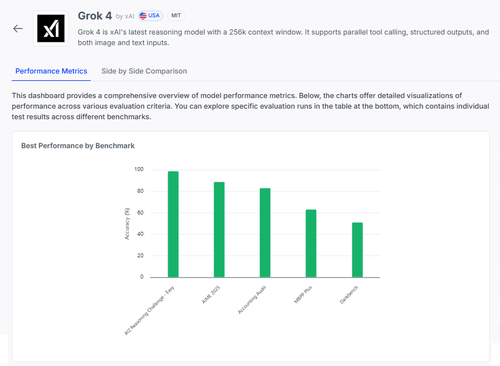

🧠 Grok 4 by @xai is making strides in reasoning benchmarks, but the picture is more nuanced than the scores suggest.

Here’s how it stacks up — and what we can really learn from its results 🧵

📊 Full eval:

1️⃣ Grok 4 scores:

• AI2 Reasoning Challenge (Easy): 98%

• AIME 2025 (Math): 89%

• Accounting Audit: 84%

• MMLU-Plus: 64%

• Data4Health: 55%

These are top-line scores — but let’s zoom in on what’s working and what still fails.

2️⃣ AIME 2025

✅ Handles algebra, geometry, number theory

✅ Follows LaTeX formatting rules

❌ Struggles with multi-step logic

❌ Errors in combinatorics

❌ Format precision issues (e.g. missing °)

3️⃣ Accounting Audit

✅ Strong on ethics & reporting

✅ Solid grasp of auditing principles

❌ Confuses procedures that look similar

❌ Fails to spot subtle answer differences

❌ Has a hard time applying theory to real-world cases

4️⃣ The real insight?

Even a model that scores 98% on some tasks can fail hard under ambiguity or formatting stress.

Benchmarks like AIME and Accounting Audit show how it fails, not just what it scores.

5️⃣ Why this matters:

We need transparent, per-task evaluation — not just leaderboards.

#Grok4 is powerful, but still brittle in high-stakes, real-world domains.
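A minimal sketch of why one leaderboard number hides this, using the five scores from tweet 1. The helper names (`summarize`, `per_task_report`) and the 70% cutoff are hypothetical, chosen only for illustration; they are not part of the eval itself.

```python
# Per-task scores (percent) as listed in tweet 1 of this thread.
SCORES = {
    "AI2 Reasoning Challenge (Easy)": 98,
    "AIME 2025 (Math)": 89,
    "Accounting Audit": 84,
    "MMLU-Plus": 64,
    "Data4Health": 55,
}


def summarize(scores: dict[str, float]) -> dict[str, float]:
    """Collapse per-task scores into a single leaderboard-style mean,
    alongside the min/max spread that the mean hides."""
    values = list(scores.values())
    return {
        "mean": sum(values) / len(values),
        "min": min(values),
        "max": max(values),
        "spread": max(values) - min(values),
    }


def per_task_report(scores: dict[str, float], threshold: float = 70.0) -> list[str]:
    """One line per task, weakest first, flagging tasks under a
    hypothetical threshold (70% here is an arbitrary assumption)."""
    lines = []
    for task, score in sorted(scores.items(), key=lambda kv: kv[1]):
        flag = "below threshold" if score < threshold else "ok"
        lines.append(f"{task:<32} {score:5.1f}%  {flag}")
    return lines


if __name__ == "__main__":
    print(summarize(SCORES))
    for line in per_task_report(SCORES):
        print(line)
```

The average lands at 78%, which looks solid on a leaderboard, yet the weakest task sits at 55% and the gap between best and worst is 43 points. The per-task view keeps that gap visible.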

🧪 Explore the full breakdown:

#AI #LLMs #Benchmarking
