Atropos v0.3 is now out!

Our RL Environments framework has seen a lot of upgrades since v0.2 - some highlights:

- Atropos can now be used as a benchmarking and evaluation framework, thanks to work by @rogershijin, with Reward-Bench 2 as our first external benchmark!

- Added the Reasoning Gym, an external environment gym repo with over 100 reasoning tasks by @neurosp1ke and friends, now ported into Atropos

- @max_paperclips integrated @intern_lm's reasoning bootcamp, adding 1000+ new reasoning tasks for RL

- @dmayhem93, the lead engineer of Atropos, added dozens of bug fixes and other reliability and compatibility improvements, better multi-environment support, and CI/CD

- Many of the Atropos hackathon environments have been merged into /environments/community - to list them all would take up most of the screen space, but some highlights:

VR-CLI by @JakeABoggs, Philosophy RLAIF, Adaptive LLM Teachers, WebVoyager, protein design by @hallerite, a model routing environment by @gabinfay, several Lean proving environments, the catbot arena, Pokémon Showdown, poker, helpful doctors, Sanskrit poetry by @khoomeik, and so much more!

- Other notable officially supported new environments include:

Answer format following environment

Pydantic to JSON environment ported from @MatternJustus's work

Instruction Following ported from @natolambert and @allen_ai's work

Letter Counting

- 47 brand new contributors!

Check out the complete changelog here:

July 18, 03:22

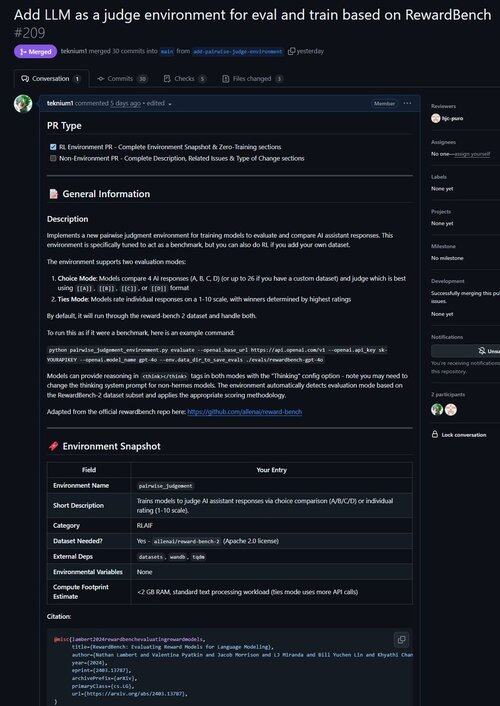

Just merged a PR for an environment that improves models at acting as an LLM-as-a-Judge and also evaluates how well they make judgments!

Did you know that all verifiable RL environments are nearly equivalent to benchmarks (and vice-versa!)? So we added an evaluate command to Atropos' base and now you can run benchmarks through Atropos environments.

We got frustrated with working with so many benchmark frameworks that were outdated or unusable, so we implemented evaluation-only mode into Atropos, our RL environments framework.
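To make the environment/benchmark equivalence concrete, here is a minimal sketch in plain Python. It does not use the Atropos API; `Item`, `score`, `rollout_rewards`, `evaluate`, and `toy_policy` are hypothetical names chosen for illustration, and the letter-counting task is just a stand-in for any verifiable environment. The point is that the same scoring function yields per-sample rewards for training and, once averaged, a benchmark accuracy.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Item:
    prompt: str
    answer: str  # reference answer used to verify a completion


def make_item(word: str, letter: str) -> Item:
    """Build a letter-counting item whose answer can be checked exactly."""
    prompt = f"How many times does '{letter}' appear in '{word}'?"
    return Item(prompt=prompt, answer=str(word.count(letter)))


def score(item: Item, completion: str) -> float:
    """Verifiable reward: 1.0 on an exact match with the reference, else 0.0."""
    return 1.0 if completion.strip() == item.answer else 0.0


def rollout_rewards(items: List[Item], policy: Callable[[str], str]) -> List[float]:
    """Training view: one reward per sampled completion."""
    return [score(item, policy(item.prompt)) for item in items]


def evaluate(items: List[Item], policy: Callable[[str], str]) -> float:
    """Benchmark view: the same rewards, averaged into an accuracy."""
    rewards = rollout_rewards(items, policy)
    return sum(rewards) / len(rewards) if rewards else 0.0


ITEMS = [
    make_item("strawberry", "r"),
    make_item("banana", "a"),
    make_item("atropos", "o"),
]


def toy_policy(prompt: str) -> str:
    """Hypothetical stand-in for a model: always answers '3'."""
    return "3"


if __name__ == "__main__":
    print(rollout_rewards(ITEMS, toy_policy))
    print(evaluate(ITEMS, toy_policy))
```

In this sketch the only difference between "training" and "benchmarking" is what happens to the rewards afterwards: a trainer would consume the per-sample list, while an evaluation run reports the mean.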

So our first port from outside our existing environments was @natolambert's Reward-Bench!

Note: it only supports generative reward models (regular LLM Judges) at the moment.
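As a rough illustration of what scoring a generative reward model involves, the sketch below assumes a pairwise setup in which each item has a preferred ("chosen") and a dispreferred ("rejected") response, and the judge replies with "A" or "B". This is an assumption for illustration, not the actual Atropos environment or the Reward-Bench data format; `judge_accuracy`, `length_judge`, and `PAIRS` are hypothetical names. In practice `judge` would wrap a call to an LLM.

```python
import random
from typing import Callable, List, Tuple

# (prompt, chosen response, rejected response); hypothetical item layout
Pair = Tuple[str, str, str]


def judge_accuracy(
    pairs: List[Pair],
    judge: Callable[[str, str, str], str],
    seed: int = 0,
) -> float:
    """Fraction of pairs where the judge picks the chosen response.

    The A/B order is shuffled per item so a judge that always favors
    one position does not get an inflated score.
    """
    rng = random.Random(seed)
    correct = 0
    for prompt, chosen, rejected in pairs:
        chosen_first = rng.random() < 0.5
        a, b = (chosen, rejected) if chosen_first else (rejected, chosen)
        verdict = judge(prompt, a, b).strip().upper()
        # Anything other than "A" or "B" counts as a wrong judgment.
        if verdict in ("A", "B") and (verdict == "A") == chosen_first:
            correct += 1
    return correct / len(pairs) if pairs else 0.0


def length_judge(prompt: str, a: str, b: str) -> str:
    """Toy stand-in for an LLM judge: prefers the longer response."""
    return "A" if len(a) >= len(b) else "B"


PAIRS: List[Pair] = [
    ("What is 2 + 2?", "The answer is 4.", "5"),
    ("What is the capital of France?", "Paris", "It is definitely Lyon."),
]

if __name__ == "__main__":
    print(judge_accuracy(PAIRS, length_judge))
```

The toy judge gets the first pair right and the second wrong because it rewards length rather than correctness, which is the kind of failure a judging benchmark is meant to surface.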

Check out the PR here:
