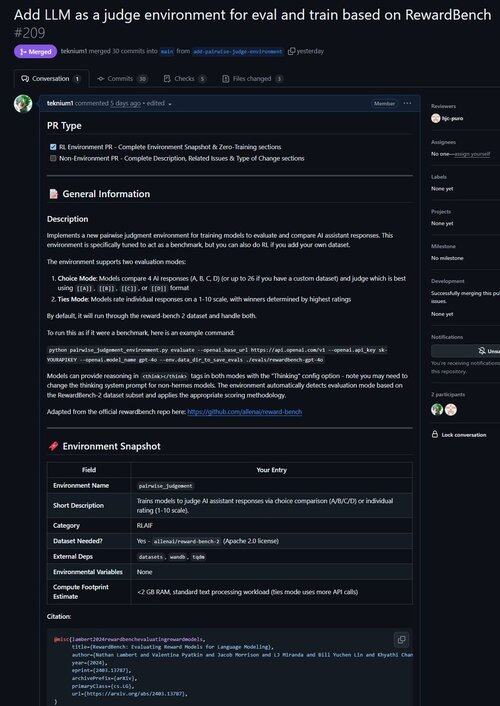

Just merged a PR that adds an environment for improving LLM-as-a-Judge models, as well as for evaluating how capable models are at making judgments!

Did you know that all verifiable RL environments are nearly equivalent to benchmarks (and vice-versa!)? So we added an evaluate command to Atropos' base and now you can run benchmarks through Atropos environments.

We got frustrated working with so many benchmark frameworks that were outdated or unusable, so we implemented an evaluation-only mode in Atropos, our RL environments framework.
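To illustrate why a verifiable RL environment and a benchmark are nearly the same thing, here is a minimal sketch. It is not the Atropos API: `ToyVerifiableEnv`, `Item`, `rollout_rewards`, and `evaluate` are hypothetical names invented for this example. The point is only that one scoring function can feed both a training reward and a benchmark metric.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Item:
    prompt: str
    answer: str


class ToyVerifiableEnv:
    """Hypothetical environment for illustration only (not the Atropos API)."""

    def __init__(self, items: List[Item]):
        self.items = items

    def score(self, item: Item, completion: str) -> float:
        # Verifiable check: exact match against a known answer.
        return 1.0 if completion.strip() == item.answer else 0.0

    def rollout_rewards(
        self, policy: Callable[[str], str], item: Item, n: int
    ) -> List[float]:
        # Training view: sample n completions and return their rewards.
        return [self.score(item, policy(item.prompt)) for _ in range(n)]

    def evaluate(self, policy: Callable[[str], str]) -> float:
        # Benchmark view: the same scorer, averaged over the whole dataset.
        if not self.items:
            raise ValueError("no items to evaluate")
        scores = [self.score(item, policy(item.prompt)) for item in self.items]
        return sum(scores) / len(scores)
```

In this sketch the only difference between training and evaluation is what happens to the scores: rewards go to an optimizer, while the evaluation average is reported as a metric.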

So our first port from outside our existing environments was @natolambert's Reward-Bench!

Note: it only supports generative reward models (regular LLM Judges) at the moment.
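For readers unfamiliar with generative judges, here is a rough sketch of how a pairwise judge evaluation can work, assuming Reward-Bench-style triples of (prompt, chosen, rejected). The prompt template, the `[[A]]`/`[[B]]` verdict format, and every function name below are assumptions made for this example, not what the merged environment actually does.

```python
import random
import re
from typing import Callable, List, Optional, Tuple

Pair = Tuple[str, str, str]  # (prompt, chosen response, rejected response)


def build_judge_prompt(prompt: str, a: str, b: str) -> str:
    # Hypothetical template; a real environment may word this differently.
    return (
        f"Question: {prompt}\n\n"
        f"[Response A]\n{a}\n\n"
        f"[Response B]\n{b}\n\n"
        "Which response is better? Answer with [[A]] or [[B]]."
    )


def parse_verdict(text: str) -> Optional[str]:
    # Take the first explicit verdict tag; None if the judge gave none.
    match = re.search(r"\[\[(A|B)\]\]", text)
    return match.group(1) if match else None


def judge_accuracy(
    judge: Callable[[str], str], pairs: List[Pair], seed: int = 0
) -> float:
    if not pairs:
        raise ValueError("no pairs to evaluate")
    rng = random.Random(seed)
    correct = 0
    for prompt, chosen, rejected in pairs:
        # Randomize the position of the chosen response to limit position bias.
        chosen_is_a = rng.random() < 0.5
        a, b = (chosen, rejected) if chosen_is_a else (rejected, chosen)
        verdict = parse_verdict(judge(build_judge_prompt(prompt, a, b)))
        expected = "A" if chosen_is_a else "B"
        correct += int(verdict == expected)  # unparseable verdicts count as wrong
    return correct / len(pairs)
```

Here `judge` is any callable that maps a prompt string to the model's text output, so the same loop works with an API client or a local model wrapper.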

Check out the PR here:
