Why can AIs code for 1h but not 10h?

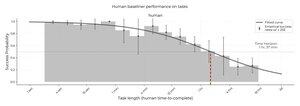

A simple explanation: if there's a 10% chance of error per 10min step (say), the success rate is:

1h: 53%

4h: 8%

10h: 0.2%
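A minimal sketch of the arithmetic behind these figures, assuming each 10-minute step fails independently with the same probability. The function name `success_rate` and its parameters are illustrative, not taken from the post:

```python
def success_rate(hours: float, error_per_step: float = 0.10,
                 step_minutes: float = 10.0) -> float:
    """Chance of finishing a task with no error in any step.

    Assumes independent steps with a constant per-step error rate
    (the 'constant error rate' model described in the post).
    """
    steps = hours * 60.0 / step_minutes
    return (1.0 - error_per_step) ** steps


if __name__ == "__main__":
    for h in (1, 4, 10):
        print(f"{h}h: {success_rate(h):.2%}")
```

Because the per-step survival probability is multiplied once per step, the success rate is a fixed base raised to the number of steps, which is why it falls off so quickly as tasks get longer.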

@tobyordoxford has tested this 'constant error rate' theory and shown it's a good fit for the data: the chance of success declines exponentially with task length.

Humans' chance of success declines more slowly with task length, so they eventually win over longer horizons.

This could be due to a remaining bastion of human advantage (or just because the human data is averaged over many levels of expertise).

Crucial point: the error rate is halving every ~5 months – at that rate, we reach systems that can do 10h tasks in 1.5 years, and 100h tasks another 1.5 years after that.
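A rough check of that timeline, under the assumption (not stated in the post) that the task length a system can handle at a fixed success rate is inversely proportional to its error rate, so each halving of the error rate doubles the horizon. The helper `months_to_scale_horizon` is hypothetical:

```python
import math


def months_to_scale_horizon(factor: float, halving_months: float = 5.0) -> float:
    """Months needed to multiply the task horizon by `factor`.

    Assumption: horizon is proportional to 1 / error rate, so the number
    of halvings required is log2(factor).
    """
    return halving_months * math.log2(factor)


if __name__ == "__main__":
    months = months_to_scale_horizon(10)  # e.g. 1h -> 10h, or 10h -> 100h
    print(f"{months:.1f} months (~{months / 12:.1f} years) per 10x in task length")
```

A 10x increase needs log2(10) ≈ 3.3 halvings, which at ~5 months each comes to somewhat under 1.5 years, consistent with the rounded figure in the post.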
