Jason Wei

ai researcher @openai

Jason Wei reposted

📣 Excited to share our real-world study of an LLM clinical copilot, a collab between @OpenAI and @PendaHealth.

Across 39,849 live patient visits, clinicians with AI had a 16% relative reduction in diagnostic errors and a 13% reduction in treatment errors vs. those without. 🧵

258.12K

Jason Wei reposted

This is my lecture from 2 months ago at @Cornell

“How do I increase my output?” One natural answer is "I will just work a few more hours." Working longer can help, but eventually you hit a physical limit.

A better question is, “How do I increase my output without increasing input as much?” That is leverage.

We hear “leverage” so often that its implication is easy to overlook. My personal favorite categorization of leverage is by Naval Ravikant: human labor, capital, and code / media. Each has powered major waves of wealth creation in history.

However, once a leverage source becomes popular (think YouTube channels today versus ten years ago) competition compresses the margin. So when a new leverage appears, it’s a rare chance for outsized gains.

In this talk, I describe AI as that emerging leverage. An AI agent blends labor leverage (it does work for you and is permissionless) with code leverage (you can copy-and-paste it).

It’s cliché to say AI will create massive wealth. But using this leverage lens lets us interpret the noisy AI news cycle in a consistent way and spot the real opportunities.

Thanks @unsojo for hosting me!

402.41K

New blog post about asymmetry of verification and "verifier's law":

Asymmetry of verification, the idea that some tasks are much easier to verify than to solve, is becoming an important idea as we have RL that finally works generally.

Great examples of asymmetry of verification are things like sudoku puzzles, writing the code for a website like instagram, and BrowseComp problems (takes ~100 websites to find the answer, but easy to verify once you have the answer).
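To make the sudoku case concrete, here is a minimal Python sketch of the cheap side of the asymmetry. The function names `is_valid_solution` and `example_solution` are illustrative and not from the original post. Checking a filled grid takes a fixed 27 set comparisons, whereas finding a solution generally requires search.

```python
def is_valid_solution(grid):
    """Return True if grid is a completed 9x9 sudoku with no rule violations."""
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    digits = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r + dr][c + dc] for dr in range(3) for dc in range(3)}
        for r in range(0, 9, 3)
        for c in range(0, 9, 3)
    ]
    # Every row, column, and 3x3 box must contain exactly the digits 1..9.
    return all(group == digits for group in rows + cols + boxes)


def example_solution():
    """Build one known-valid grid from a simple shifting pattern (illustration only)."""
    return [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]


if __name__ == "__main__":
    grid = example_solution()
    print(is_valid_solution(grid))
```

The verifier never needs to know how the grid was produced, which is what makes it usable as an automatic check on any solver.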

Other tasks have near-symmetry of verification, like summing two 900-digit numbers or some data processing scripts. Yet other tasks are much easier to propose feasible solutions for than to verify them (e.g., fact-checking a long essay or stating a new diet like "only eat bison").

An important thing to understand about asymmetry of verification is that you can improve the asymmetry by doing some work beforehand. For example, if you have the answer key to a math problem or if you have test cases for a Leetcode problem. This greatly increases the set of problems with desirable verification asymmetry.

"Verifier's law" states that the ease of training AI to solve a task is proportional to how verifiable the task is. All tasks that are possible to solve and easy to verify will be solved by AI. The ability to train AI to solve a task is proportional to whether the task has the following properties:

1. Objective truth: everyone agrees what good solutions are

2. Fast to verify: any given solution can be verified in a few seconds

3. Scalable to verify: many solutions can be verified simultaneously

4. Low noise: verification is as tightly correlated to the solution quality as possible

5. Continuous reward: it’s easy to rank the goodness of many solutions for a single problem
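As a rough illustration of how prepared test cases can act as a verifier with a continuous reward, the sketch below scores candidate solutions by the fraction of tests they pass and ranks them. The names `score_candidate` and `rank_candidates`, and the toy "absolute value" task, are assumptions made for this example and do not come from the post.

```python
def score_candidate(candidate, test_cases):
    """Return the fraction of test cases passed, a reward in [0, 1]."""
    passed = 0
    for args, expected in test_cases:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            # A crashing candidate simply earns no credit for this case.
            pass
    return passed / len(test_cases)


def rank_candidates(candidates, test_cases):
    """Sort (name, score) pairs from best to worst score."""
    scored = [(name, score_candidate(fn, test_cases)) for name, fn in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy task: implement absolute value. The test cases play the role of the answer key.
TEST_CASES = [((3,), 3), ((-2,), 2), ((0,), 0), ((-7,), 7)]

CANDIDATES = {
    "correct": lambda x: x if x >= 0 else -x,
    "always_negate": lambda x: -x,
    "identity": lambda x: x,
}

if __name__ == "__main__":
    for name, score in rank_candidates(CANDIDATES, TEST_CASES):
        print(f"{name}: {score:.2f}")
```

Each check is objective, runs in well under a second, and can run in parallel across candidates. The fractional score gives a ranking rather than a bare pass/fail signal.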

One obvious instantiation of verifier's law is the fact that most benchmarks proposed in AI are easy to verify and so far have been solved. Notice that virtually all popular benchmarks in the past ten years fit criteria #1-4; benchmarks that don’t meet criteria #1-4 would struggle to become popular.

Why is verifiability so important? The amount of learning in AI that occurs is maximized when the above criteria are satisfied; you can take a lot of gradient steps where each step has a lot of signal. Speed of iteration is critical—it’s the reason that progress in the digital world has been so much faster than progress in the physical world.

AlphaEvolve from Google is one of the greatest examples of leveraging asymmetry of verification. It focuses on setups that fit all the above criteria, and has led to a number of advancements in mathematics and other fields. Different from what we've been doing in AI for the last two decades, it's a new paradigm in that all problems are optimized in a setting where the train set is equivalent to the test set.

Asymmetry of verification is everywhere and it's exciting to consider a world of jagged intelligence where anything we can measure will be solved.

298.78K

straight banger, i read immediately

Kevin Lu · 10 Jul, 00:01

Why you should stop working on RL research and instead work on product //

The technology that unlocked the big scaling shift in AI is the internet, not transformers

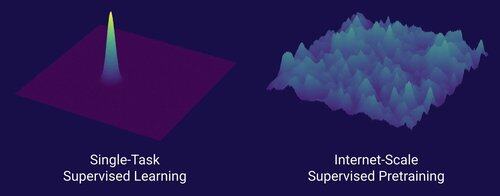

I think it's well known that data is the most important thing in AI, and also that researchers choose not to work on it anyway. ... What does it mean to work on data (in a scalable way)?

The internet provided a rich source of abundant data, that was diverse, provided a natural curriculum, represented the competencies people actually care about, and was an economically viable technology to deploy at scale -- it became the perfect complement to next-token prediction and was the primordial soup for AI to take off.

Without transformers, any number of approaches could have taken off, we could probably have CNNs or state space models at the level of GPT-4.5. But there hasn't been a dramatic improvement in base models since GPT-4. Reasoning models are great in narrow domains, but not as huge of a leap as GPT-4 was in March 2023 (over 2 years ago...)

We have something great with reinforcement learning, but my deep fear is that we will repeat the mistakes of the past (2015-2020 era RL) and do RL research that doesn't matter.

In the way the internet was the dual of supervised pretraining, what will be the dual of RL that will lead to a massive advancement like GPT-1 -> GPT-4? I think it looks like research-product co-design.

18.63K

We don’t have AI that self-improves yet, and when we do it will be a game-changer. With more wisdom now compared to the GPT-4 days, it's obvious that it will not be a “fast takeoff”, but rather extremely gradual across many years, probably a decade.

The first thing to know is that self-improvement, i.e., models training themselves, is not binary. Consider the scenario of GPT-5 training GPT-6, which would be incredible. Would GPT-5 suddenly go from not being able to train GPT-6 at all to training it extremely proficiently? Definitely not. The first GPT-6 training runs would probably be extremely inefficient in time and compute compared to human researchers. Only after many trials would GPT-5 actually be able to train GPT-6 better than humans.

Second, even if a model could train itself, it would not suddenly get better at all domains. There is a gradient of difficulty in how hard it is to improve oneself in various domains. For example, maybe self-improvement only works at first on domains that we already know how to easily fix in post-training, like basic hallucinations or style. Next would be math and coding, which take more work but have established methods for improving models. And then at the extreme, you can imagine that there are some tasks that are very hard for self-improvement. For example, the ability to speak Tlingit, a Native American language spoken by ~500 people. It will be very hard for the model to self-improve on speaking Tlingit, as we don’t yet have ways of solving low-resource languages like this except collecting more data, which would take time. So because of the gradient of difficulty of self-improvement, it will not all happen at once.

Finally, maybe this is controversial but ultimately progress in science is bottlenecked by real-world experiments. Some may believe that reading all biology papers would tell us the cure for cancer, or that reading all ML papers and mastering all of math would allow you to train GPT-10 perfectly. If this were the case, then the people who read the most papers and studied the most theory would be the best AI researchers. But what really happened is that AI (and many other fields) became dominated by ruthlessly empirical researchers, which reflects how much progress is based on real-world experiments rather than raw intelligence. So my point is, although a super smart agent might design 2x or even 5x better experiments than our best human researchers, at the end of the day they still have to wait for experiments to run, which would be an acceleration but not a fast takeoff.

In summary, there are many bottlenecks for progress, not just raw intelligence or a self-improvement system. AI will solve many domains, but each domain has its own rate of progress. And even the highest intelligence will still require experiments in the real world. So it will be an acceleration and not a fast takeoff. Thank you for reading my rant.

339.86K

I would say that we are undoubtedly at AGI when AI can create a real, living unicorn. And no I don’t mean a $1B company you nerds, I mean a literal pink horse with a spiral horn. A paragon of scientific advancement in genetic engineering and cell programming. The stuff of childhood dreams. Dare I say it will happen in our lifetimes

84.28K
