RT to help Simon raise awareness of prompt injection attacks in LLMs.

Feels a bit like the wild west of early computing, with computer viruses (now = malicious prompts hiding in web data/tools) and defenses that aren't well developed yet (no real equivalent of antivirus, or of a much more developed kernel/user space security paradigm where e.g. an agent is given very specific action types instead of the ability to run arbitrary bash scripts).
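To make the "very specific action types" idea concrete, here is a minimal Python sketch, not any real agent framework's API. The `Action` enum, the `HANDLERS` table, and `dispatch` are all hypothetical names chosen for illustration; the only point is that the model can request an action by name, and anything outside the fixed list is refused rather than executed.

```python
import os
from enum import Enum


class Action(Enum):
    """Hypothetical, deliberately small set of actions the agent may request."""
    LIST_DIR = "list_dir"
    READ_FILE = "read_file"


def _list_dir(path: str) -> list[str]:
    return sorted(os.listdir(path))


def _read_file(path: str) -> str:
    with open(path, encoding="utf-8") as f:
        return f.read()


# Each allowed action maps to exactly one narrow handler.
# There is intentionally no "run arbitrary shell command" entry.
HANDLERS = {
    Action.LIST_DIR: _list_dir,
    Action.READ_FILE: _read_file,
}


def dispatch(action_name: str, argument: str):
    """Run a requested action only if it is on the allowlist."""
    try:
        action = Action(action_name)
    except ValueError:
        raise PermissionError(f"action not allowed: {action_name!r}") from None
    return HANDLERS[action](argument)
```

With this shape, a request like `dispatch("run_bash", "curl ... | sh")` raises `PermissionError` instead of running. An allowlist alone does not stop prompt injection (a malicious prompt could still ask for `read_file` on something sensitive), it only narrows what a hijacked agent can do.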

Conflicted because I want to be an early adopter of LLM agents in my personal computing but the wild west of possibility is holding me back.

June 16, 2025

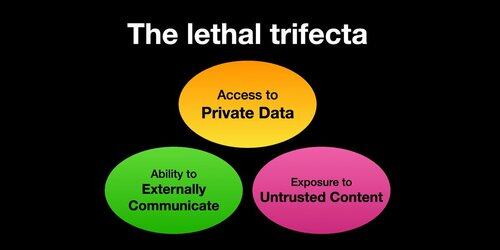

If you use "AI agents" (LLMs that call tools), you need to be aware of the Lethal Trifecta.

Any time you combine access to private data with exposure to untrusted content and the ability to communicate externally, an attacker can trick the system into stealing your data!
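As a rough illustration of that rule, the check below flags an agent setup only when all three capabilities are present at once. This is a sketch under stated assumptions: `AgentCapabilities` and its field names are hypothetical, and in practice deciding whether a given tool "exposes untrusted content" or "can communicate externally" is the hard part that a boolean flag glosses over.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what an agent's combined tools can do."""
    reads_private_data: bool          # e.g. email, files, internal docs
    sees_untrusted_content: bool      # e.g. web pages, inbound messages
    can_communicate_externally: bool  # e.g. HTTP requests, sending email


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three risky capabilities are combined."""
    return (
        caps.reads_private_data
        and caps.sees_untrusted_content
        and caps.can_communicate_externally
    )


if __name__ == "__main__":
    risky = AgentCapabilities(True, True, True)
    safer = AgentCapabilities(True, True, False)
    print(has_lethal_trifecta(risky))
    print(has_lethal_trifecta(safer))
```

Removing any one of the three legs makes the check return `False`, which matches the framing above: the danger comes from the combination, not from any single capability.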

I should clarify that the risk is highest if you're running local LLM agents (e.g. Cursor, Claude Code).

If you're just talking to an LLM on a website (e.g. ChatGPT), the risk is much lower *unless* you start turning on Connectors. For example, I just saw that ChatGPT is adding MCP support. This will combine especially poorly with all the recently added memory features, e.g. imagine ChatGPT telling everything it knows about you to some attacker on the internet just because you checked the wrong box in the Connectors settings.
