
"You hear him, he is still talking non-stop, but this time, we can finally confirm what he said."

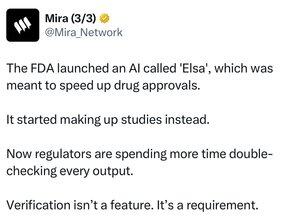

Mira @Mira_Network may never have set out to make AI more human-like; it simply wants AI to leave verifiable evidence behind every statement it makes.

Let the most powerful "black box" of this era become the most transparent thing on-chain.

____ 🁢🁢🁢 ____🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____

Mira @Mira_Network: put brakes on speeding AI instead of giving it a pair of wings.

AI can talk, write code, and simulate emotions, but can it really be trusted? That is the question Mira @Mira_Network aims to answer.

Mira's answer is clear: what matters is not how human-like AI sounds, but whether it leaves behind a record of "this was said" that holds up to anyone's scrutiny.

This is the biggest difference between Mira @Mira_Network and the vast majority of AI projects: rather than competing to show how "smart" it is, it goes against the grain and builds a foundation of "trustworthiness."

It does not take any model's output at face value; instead, it embeds verifiable traces for every AI action, response, and judgment in the underlying architecture.

This is not about optimizing the experience; it is about defining what counts as an "answer."

____ 🁢🁢🁢 ____🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____

AI is always a tool, never an actor in its own right.

That statement may carry my own bias, but it is also the underlying order that Mira @Mira_Network wants to re-establish.

AI is not your boss or your colleague. It is a tool in your hand, like the sword a warrior uses to win battles: the sharper it is, the more it needs a steady hand in control.

It can be efficient, self-consistent, and human-like, but ultimately it must be supervised, sign on-chain, leave evidence, and accept verification. This is not an experience-layer optimization but a structural constraint: AI is not allowed to grow autonomously or break away from the rules, and is forced to act within the framework of human authority.

This is the starting point of Mira @Mira_Network's design: make verification routine and make supervision part of the system itself. You do not need to understand the details of its models or master its graph structure, but you do need to realize that without a verification mechanism, anything an AI says might as well be unsaid, because you cannot tell true from false or right from wrong.

____ 🁢🁢🁢 ____🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____ 🁢🁢🁢 ____

If you agree that AI is a tool that needs to be constrained, verified, and disciplined, then Mira @Mira_Network will be hard to ignore.

That is because in its world, AI is always free to speak, but it must also bear responsibility, withstand repeated verification, and ultimately put a trustworthy statement in front of you.
