宝玉

Prompt Engineer, dedicated to learning and disseminating knowledge about AI, software engineering, and engineering management.

Today there's a hot story on Hacker News about California's unemployment rate rising to 5.5%, the highest in the nation, with the tech industry struggling: "The job market is too brutal."

> According to data released by the state government on Friday, California's unemployment rate rose to 5.5% in July, ranking first among all states in the U.S. This is attributed to the ongoing weakness in the tech industry and other office jobs, along with a sluggish hiring market.

The news attributes this to the weakness in the tech industry, as it plays a crucial role in California's economy. The discussion in the Hacker News community has been intense, with people analyzing the deeper reasons from their perspectives, which are far more complex than what the headline suggests.

I think the discussion does a good job of explaining why tech employment is so weak right now. The main points are summarized below.

1. The core point is: the multiple aftereffects of saying goodbye to the "zero interest rate era"

This is the most mainstream and profound viewpoint in the discussion. Many believe that the current predicament of the tech industry is not caused by a single factor, but rather a chain reaction triggered by the end of the "zero interest rate policy" (ZIRP) era over the past decade.

- Capital bubble burst: From around 2012 to 2022, extremely low interest rates made capital exceptionally cheap. Venture capital (VC) flooded into the tech industry and gave rise to countless business models that relied on "burning money" for growth, especially cryptocurrency and metaverse companies that lacked real value. Once the Federal Reserve raised interest rates, the era of cheap money ended and these companies' funding dried up, resulting in massive layoffs and bankruptcies.

- Talent supply-demand imbalance: During the ZIRP era, the myth of high salaries in the tech industry attracted a large influx of talent. University computer science (CS) programs expanded significantly, coding boot camps proliferated, and coupled with tech immigration, the supply of software engineers surged dramatically over the decade. However, as capital retreated, the demand side (especially startups) shrank sharply, leading to a severe oversupply of talent.

- Spillover effects on industries like biotech: Industries like biotechnology (Biotech), which also rely on long-term, high-risk investments, have also been severely impacted. These industries are even more dependent on cheap capital than the software industry. After ZIRP ended, VC funding gradually dried up, and startups, having exhausted their "runway" funds, could not secure new rounds of financing and had to lay off employees or shut down.

> (by tqi): "In my view, it’s too early to say that 'AI' has a substantial impact on hiring in software companies. A more reasonable explanation is that between 2012 and 2022, the supply of software engineers surged... Meanwhile, on the demand side, the VC funds during the zero interest rate era mainly went to those nonsensical cryptocurrencies and metaverse companies, most of which failed, leading to a lack of later-stage or newly listed companies that could absorb this talent."

2. The "double-edged sword" of remote work: A new wave of global outsourcing

The COVID-19 pandemic popularized remote work (Work From Home, WFH), which was seen as a boon by many developers at the time, but now, its negative effects are beginning to surface.

- Paving the way for outsourcing: When developers fought hard for the right to work fully remotely, they may not have realized that this also opened the door for companies to outsource jobs to countries with lower costs. Since everyone is remote, why not hire an Indian or Eastern European engineer who is just as good but only earns 1/5 of what a U.S. engineer makes?

- The "no turning back" office: Some commentators believe that the "Return to Office" (RTO) policies pushed by tech companies are, to some extent, aimed at protecting local jobs. Once it is proven that work can be done 100% remotely, it can be done from anywhere in the world, and the salary advantage of U.S. engineers will no longer exist.

- Debate over outsourcing quality: Others argue that outsourcing has been ongoing for decades, and high-quality software development still requires top local talent due to issues like communication costs, time zone differences, and cultural backgrounds. However, supporters of outsourcing believe that as remote collaboration tools mature and management models improve, these barriers are gradually being overcome.

> (by aurareturn): "I’ve been saying on HN since 2022: all North American developers supporting fully remote work will be shocked when your company decides to replace you with overseas workers. Since it’s all remote, why would a company pay you five times more instead of a harder-working, less complaining overseas employee?... Supporting the return to the office may, in the long run, save your career."

3. The role of AI: A productivity tool, a layoff excuse, or a capital "vampire"?

The role of artificial intelligence (AI) in this wave of unemployment presents complex divisions in the discussion.

- Limited direct substitution effect: Most people agree that current AI cannot fully replace experienced software engineers. However, it has begun to replace some junior, repetitive tasks, such as minor consulting tasks. Some consultants have reported that clients no longer contact them because they can use ChatGPT to solve minor bugs.

- The "perfect excuse" for layoffs: A common viewpoint is that AI has become the "perfect excuse" for companies to lay off employees and cut costs. Even if the fundamental reason for layoffs is economic downturn or management decisions, companies are happy to package it as a strategic adjustment of "embracing AI and improving efficiency."

- The "black hole" of capital: AI plays another key role—it siphons off the remaining venture capital that could have flowed into other tech fields. VCs are now almost exclusively interested in AI projects, exacerbating the financing difficulties for startups in non-AI sectors.

4. The "rust belt" of the tech industry? Structural concerns for the future

Some commenters take a more macro view and worry about the future, comparing the tech industry to the once-glorious but now declining manufacturing "rust belt."

- Repetition of job losses: Just as the U.S. outsourced manufacturing to China, IT and software development jobs are now massively shifting to India, Latin America, and Eastern Europe. This could lead to long-term structural unemployment for the once high-paying software engineer group.

- Political and social impacts: If a large number of middle-class tech jobs disappear, it could trigger new social and political issues, just as the decline of the "rust belt" continues to influence the political landscape in the U.S.

- Controversy over immigration and visa policies (H1B/O1): Some discussions point fingers at work visas like H1B, arguing that they are abused, driving down local engineers' salaries and intensifying competition. Others staunchly defend tech immigration, believing that it is these top talents from around the world (like graduates from the University of Waterloo) that form the cornerstone of innovation in Silicon Valley.

5. Company management and cultural changes: The "Musk effect"

An interesting viewpoint suggests that Musk's massive layoffs at Twitter (now X) have created a demonstration effect.

- Rationalization of layoffs: When Musk laid off over 75% of Twitter's employees, the product continued to operate, prompting many CEOs to reflect: "If he can do it, why can’t I?" This broke the past mindset of tech companies that "more talent is better," making large-scale layoffs psychologically and commercially easier to accept.

6. Political and policy factors: Controversies over tax law changes

A technical but far-reaching clue is about changes in U.S. tax law.

- R&D expense amortization rules (Section 174): A provision in the 2017 tax reform bill (TCJA) by the Trump administration requires companies to amortize software development salaries and other R&D expenses over five years starting in 2022, rather than deducting them in full in the year incurred as before. This greatly increases the tax burden on tech companies (especially startups) and suppresses their willingness to hire domestically.

- Recent legislation's remedial effect: The recently passed "One Big Beautiful Bill" (BBB) act partially corrected this issue, allowing domestic R&D expenses to be deducted immediately again. Some commentators say they felt the hiring market warm up around July, which may be related to this.
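To make the Section 174 point concrete, here is a rough sketch of the first-year arithmetic. The revenue and payroll figures are hypothetical, the flat 21% federal rate is an assumption for illustration, and the mid-year convention (only half of the first year's one-fifth share is deductible) is an assumption that the post itself does not mention.

```python
def year_one_taxable_income(revenue, rd_salaries, amortize):
    """Taxable income for the year in which the R&D salaries are paid."""
    if amortize:
        # Five-year amortization; the mid-year convention (an assumption
        # here, not stated in the post) halves the first year's share:
        # 1/5 * 1/2 = 10% of the cost.
        deductible = rd_salaries / 5 / 2
    else:
        # Old rule: deduct the full cost in the year it is incurred.
        deductible = rd_salaries
    return revenue - deductible


REVENUE = 1_000_000      # hypothetical startup revenue
RD_SALARIES = 1_000_000  # hypothetical developer payroll
TAX_RATE = 0.21          # assumed flat federal corporate rate

for label, amortize in [("full deduction", False), ("five-year amortization", True)]:
    income = year_one_taxable_income(REVENUE, RD_SALARIES, amortize)
    print(f"{label}: taxable income ${income:,.0f}, tax ${income * TAX_RATE:,.0f}")
```

Under these assumptions, a startup that spends everything it earns on developers owes nothing with full deduction, but shows $900,000 of taxable income and a $189,000 tax bill under amortization, despite having no cash profit.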

In conclusion

From these discussions, it appears that the reasons for the current weak employment in California's tech industry are complex and cannot be traced to a single factor. It cannot simply be attributed to "AI replacing humans" or "cyclical industry downturns." It is instead the combined result of the economic reckoning following the end of the zero interest rate era, the restructuring of the global labor market brought about by remote work, the dual impact of AI as a new technology and capital magnet, and changes in specific tax policies, among other factors.

I wonder when we will be able to emerge from this predicament? Or perhaps the reasons are not just those discussed above.

Translation: Why Large Language Models Can't Truly Build Software

Author: Conrad Irwin

One thing I've spent a lot of time doing is interviewing software engineers. This is obviously a daunting task, and I wouldn't say I have any special tricks; but the experience has given me time to reflect on what an effective software engineer actually does.

The Core Loop of Software Engineering

When you observe a true expert, you'll find that they are always cycling through the following steps:

* Building a mental model of the requirements.

* Writing (hopefully?!) code that meets those requirements.

* Building a mental model of the actual behavior of the code.

* Identifying the differences between the two and then updating the code (or the requirements).

There are many ways to complete these steps, but what sets effective engineers apart is their ability to build and maintain clear mental models.

How Do Large Language Models Perform?

To be fair, large language models are quite good at writing code. When you point out where the problem lies, they also do a decent job of updating the code. They can do all the things that real engineers do: read code, write and run tests, add logging, and (probably) use a debugger.

But what they can't do is maintain a clear mental model.

Large language models can get caught in endless confusion: they assume that the code they write actually works; when tests fail, they can only guess whether to fix the code or the tests; when they feel frustrated, they simply delete everything and start over.

This is the exact opposite of the qualities I expect in an engineer.

Software engineers test as they work. When tests fail, they can refer to their mental model to decide whether to fix the code or the tests, or to gather more information before making a decision. When they feel frustrated, they can seek help by communicating with others. Although they sometimes delete everything and start over, that choice comes after they have a clearer understanding of the problem.

But it will be fine soon, right?

Will this change as models become more capable? Maybe?? But I think it requires a fundamental change in how models are built and optimized. The models needed for software engineering are not just those that can generate code.

When a person encounters a problem, they can temporarily set aside all context, focus on solving the immediate issue, and then return to their previous thoughts and the larger problem at hand. They can also switch fluidly between the macro big picture and micro details, temporarily ignoring details to focus on the whole, and dive deep into specifics when necessary. We won't become more efficient just by cramming more words into our "context window"; that will only drive us crazy.

Even if we can handle vast amounts of context, we know that current generative models have several serious issues that directly affect their ability to maintain clear mental models:

* Context omission: Models are not good at identifying omitted contextual information.

* Recency bias: They are severely affected by recency bias when processing context windows.

* Hallucination: They often "hallucinate" details that shouldn't exist.

These issues may not be insurmountable; researchers are working to add memory to models so they can employ similar thinking techniques as we do. But unfortunately, as it stands, they (beyond a certain level of complexity) cannot truly understand what is happening.

They cannot build software because they cannot maintain two similar "mental models" simultaneously, identify the differences, and decide whether to update the code or the requirements.

So, what now?

Clearly, large language models are useful for software engineers. They can quickly generate code and excel at integrating requirements and documentation. For certain tasks, this is sufficient: the requirements are clear enough, and the problems are simple enough that they can be solved in one go.

That said, for any task with a bit of complexity, they cannot maintain enough context accurately to iteratively produce a viable solution. You, as a software engineer, still need to ensure that the requirements are clear and that the code truly implements the functionality it claims.

At Zed, we believe that in the future, humans and AI agents can collaboratively build software. However, we firmly believe (at least for now) that you are the driver holding the steering wheel, while large language models are just another tool at your disposal.

宝玉 reposted

Yesterday I spoke at the CSDN Product Manager Conference.

Three months ago, when my friends at CSDN invited me to give a talk at the Product Manager Conference, I actually wanted to decline.

The reason was that my startup had only been established for six months, and I didn't have much valuable insight to share with everyone.

However, my friends at CSDN said it was okay, the conference was still three months away, and I could also share some previous product experiences.

As luck would have it, after we launched FlowSpeech last week, the product received rave reviews, our MRR tripled, and our ARR also surpassed a small target. More importantly, our users made real money by using the product, which is the best proof of our product's strength.

So yesterday during my speech, I joked with everyone that the value of this PPT has skyrocketed, so please listen carefully.

"As a farmer, I only buy organic food; as an AI practitioner, I only look at non-AI-generated content" 😅

马东锡 NLP 🇸🇪 · 15 Aug, 16:12

I don't know about you, but as an AI practitioner, I instinctively reject everything generated by AI.

When reviewing code, if I feel it's written by AI, I just write LGTM.

When reading articles, as soon as I detect it's AI-generated, I immediately close it.

If a website's UI looks like it's generated by AI, I close it right away.

If a podcast is AI-generated, I turn it off immediately.

If a short video is generated by AI, I swipe it away and switch to videos of used car dealers or repairing donkey hooves.

I think letting AI-generated content fool my dopamine and endorphins is irresponsible to my body and mind.

AI-generated content certainly has value, but it's limited to being an intermediary between human input and output; it should not and cannot circulate as a final form for a long time.

Vibe Coding is a misleading term; its main significance lies in using AI for prototype development, which can help quickly determine product requirements. In software engineering, the code for prototype development is usually disposable, and when formally developing a product, it requires a complete system redesign, followed by recoding and implementation. The outcome of Vibe Coding is similar; after finalizing the requirements, it still necessitates redesigning and redeveloping.

铁锤人 · 14 Aug, 21:07

Many people probably don't know what vibe coding is.

The term was coined by AI guru Andrej Karpathy:

you describe a problem to the AI, and it writes the code itself.

It's only suitable for simple weekend projects that only you will use.

Because these are unimportant projects, it can act on instinct without needing to actually plan or test.

👇 This is where the dream begins.

In context engineering, the agent needs to interact with tools and the environment to obtain the data that completes its context.

dontbesilent · 14 Aug, 05:13

I suddenly understood what Claude Code and Comet are all about, and why agents appear in both the CLI and the browser, becoming the mainstream choice.

The location of the agent is very important!

Developers like to use Claude Code because their code can be controlled through the CLI; the code is essentially the context for communicating with the large model. With code, I can say less, and the agent's work directly rewrites the files on my computer.

However, after messing around today, I found that people in the media industry can't use it because they have nothing on their computers; the core content is all in apps and browsers.

But Claude Code has a hard time accessing content in browsers and apps, so the core issue isn't whether I use Sonnet or Opus, but rather that this agent shouldn't live in the command line.

This agent should appear in the browser! For example, the Coze workflow that Douyin has been promoting for scraping data from Xiaohongshu can be done directly with Comet.

For people in the media industry, Comet is the real Claude Code because the agent for media creators must appear in the browser.

By comparison, the earlier Dia browser looks rather foolish; that wasn't an agent, it was an LLM.

If you just insert an LLM into the browser, I think it’s almost meaningless.

Self-Study Computer Science Tutorial TeachYourselfCS

If you are a self-taught engineer or have graduated from a programming bootcamp, it is essential for you to learn computer science. Fortunately, you don't have to spend years and a significant amount of money pursuing a degree: you can achieve a world-class education just by relying on yourself 💸.

There are many learning resources available on the internet, but their quality is mixed. What you need is not a list like "200+ Free Online Courses," but answers to the following questions:

What subjects should you learn, and why?

What are the best books or video courses for these subjects?

In this guide, we attempt to provide definitive answers to these questions.

Deedy · 14 Aug, 09:59

"Teach Yourself Computer Science" is the best resource to learn CS.

2 weeks into vibe coding and non-technical people feel the pain. "I really wish I was technical. I just don't know how to proceed."

It takes ~1000hrs across 9 topics to understand CS with any depth.

宝玉 reposted

Here comes the friends~ I made only 20 bucks with 3 million users: The false prosperity of AI tools - ListenHub

Telling jokes, who can't do that? Many people were very interested in the case I shared on Hardland Hacker before, so I specifically used @oran_ge's ListenHub to create a joke podcast. You can come and listen~

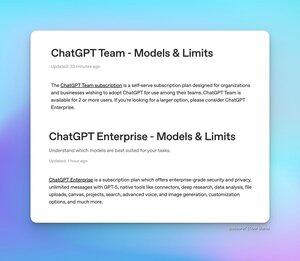

Many friends have been asking about the usage limits of the ChatGPT Team and Enterprise versions. Two new official FAQ articles now explain them.

* ChatGPT Team version - There are no usage limits for GPT-5 and GPT-4o, but there are the following limits for different model versions:

  * 200 GPT-5 Thinking requests per day

  * 2800 GPT-5 Thinking mini requests per week

  * 15 GPT-5 Pro requests per month

  * 500 GPT-4.1 requests every 3 hours

  * 300 o4-mini and o3 requests per day

* ChatGPT Enterprise version - There are no usage limits for GPT-5, GPT-4o, and GPT-4.1-mini, but there are the following limits for different model versions:

  * 200 GPT-5 Thinking requests per week

  * 15 GPT-5 Pro requests per month

  * 20 GPT-4.5 requests per week

  * 500 GPT-4.1 requests every 3 hours

  * 300 o4-mini requests per day

  * 100 o4-mini-high requests per day

  * 100 o3 requests per week

  * 15 o3-pro requests per month

The FAQ article also mentions that the GPT-5 Thinking limits currently in effect are temporarily higher than the long-term limits listed above.
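Because the quotas use different periods, they are easier to compare once converted to a common unit. The sketch below turns a few of the figures above into an average daily ceiling. It assumes usage is spread evenly and says nothing about whether the real windows are rolling or fixed, which the post does not specify. The dictionary layout and the `per_day` helper are illustrative only, and the monthly quotas are left out to avoid assuming a month length.

```python
PERIOD_HOURS = {"3 hours": 3, "day": 24, "week": 24 * 7}


def per_day(count, period):
    """Average daily ceiling implied by a quota of `count` per `period`."""
    return count * 24 / PERIOD_HOURS[period]


# Figures copied from the list above.
TEAM = {
    "GPT-5 Thinking": (200, "day"),
    "GPT-5 Thinking mini": (2800, "week"),
    "GPT-4.1": (500, "3 hours"),
}
ENTERPRISE = {
    "GPT-5 Thinking": (200, "week"),
    "GPT-4.1": (500, "3 hours"),
    "o3": (100, "week"),
}

for plan, limits in [("Team", TEAM), ("Enterprise", ENTERPRISE)]:
    for model, (count, period) in limits.items():
        print(f"{plan:<10} {model:<20} {per_day(count, period):8.1f} per day")
```

On these numbers, the listed Enterprise limit for GPT-5 Thinking averages roughly 29 requests a day against 200 a day for Team, while the GPT-4.1 quota is identical on both plans.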

Tibor Blaho · 14 Aug, 03:17

For everyone asking about limits of ChatGPT Team & Enterprise - there are 2 new FAQ articles

- ChatGPT Team - unlimited GPT-5 and GPT-4o, 200 GPT-5 Thinking requests/day, 2800 GPT-5 Thinking mini requests/week, 15 GPT-5 Pro requests/month, 500 GPT-4.1 requests/3 hours, 300 o4-mini and o3 requests/day

- ChatGPT Enterprise - unlimited GPT-5, GPT-4o and GPT-4.1-mini, 200 GPT-5 Thinking requests/week, 15 GPT-5 Pro requests/month, 20 GPT-4.5 requests/week, 500 GPT-4.1 requests/3 hours, 300 o4-mini requests/day, 100 o4-mini-high requests/day, 100 o3 requests/week, 15 o3-pro requests/month

The FAQ article mentions that GPT-5 Thinking limits are temporarily higher than the long-term rates shown above

This point is indeed true: a prompt should focus on what to do rather than on what not to do. Large models are similar to humans in this regard; the more you tell them not to do something, the more likely they are to be drawn to it.

素人极客-Amateur Geek · 13 Aug, 23:50

When you want to prohibit the model from doing something,

do your best not to write it directly!!!

do your best not to write it directly!!!

do your best not to write it directly!!!

Let me briefly explain a few methods:

1. If you really have to write, do not exceed two points.

2. Change "do not" to "do". Avoid writing awkward sentences—check each sentence to ensure the context, transitions, and connections are coherent.

3. Prohibited content should go from not appearing to appearing multiple times. Some things cannot be remembered after just one mention. When I was in school, my Japanese language teacher said that Japanese companies have a special practice of repeatedly explaining a simple matter to prevent you from forgetting it; even the smallest details will be remembered if mentioned multiple times. You can prohibit at the beginning, in the middle, in related areas, and at the end as well.

4. If the prohibition is about not doing something, add a step: turn the single task into two steps. At the end of the first step, have the model ask whether there are any prohibitions. Then send it the prohibitions and have it screen the draft against them, changing only the affected parts and leaving all other information unchanged.

5. Place the prohibitions in the first step.

6. Ensure that your prohibitions can actually be enforced. For example, if you haven't defined the style of the text, and the text ends up sounding AI-generated, then prohibiting it from using an AI tone is useless; it doesn't even know what tone it's using!
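Methods 2 and 3 can be sketched in a few lines: phrase the constraint as something to do, then state it both before and after the task. The `build_prompt` helper and the example wording are hypothetical, and how well the repetition works will depend on the model.

```python
def build_prompt(task: str, requirement: str) -> str:
    """Place a positively phrased requirement before and after the task."""
    return "\n\n".join([
        f"Requirement: {requirement}",
        f"Task: {task}",
        f"Before you answer, check the draft against this again: {requirement}",
    ])


# The prohibition as it might first be written...
NEGATIVE = "Do not sound like an AI."
# ...and the same intent restated as a concrete instruction (method 2).
POSITIVE = "Write in a plain, conversational voice with short sentences and concrete examples."

prompt = build_prompt("Summarize the article in 100 words.", POSITIVE)
print(prompt)
```

The positive version also covers method 6: it defines the style the model should use, so there is something concrete to check the output against.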
