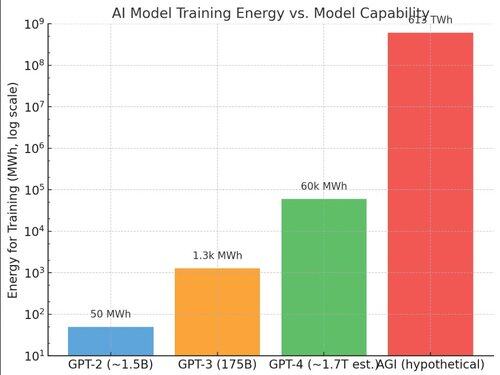

How much Energy to Train AGI? ☀️🔋

- Current record: GPT‑4’s full training run ≈ 55 GWh

- AGI‑class system (≈ 100 million H100‑equivalent GPUs): projected training energy use ≈ 613,000 GWh per year

- That is a gap of roughly four orders of magnitude (about 11,000×)

- Best-estimate timeline: 2031-2035
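
Quick sanity check on the numbers above, as a rough sketch. The three input figures come from this post. The 8,760 hours per year and the assumption that every GPU runs flat out all year are mine, not the post's.

```python
import math

# Figures quoted in the post
GPT4_TRAINING_GWH = 55.0        # GPT-4 full training run
AGI_ANNUAL_GWH = 613_000.0      # projected AGI-class training energy per year
GPU_COUNT = 100_000_000         # H100-equivalent GPUs

# Assumption (not from the post): non-leap year, 100% utilization
HOURS_PER_YEAR = 8760

# How many times larger is the projected annual figure?
ratio = AGI_ANNUAL_GWH / GPT4_TRAINING_GWH
orders = math.log10(ratio)

# Average continuous power implied by the annual energy figure
avg_power_gw = AGI_ANNUAL_GWH / HOURS_PER_YEAR
watts_per_gpu = avg_power_gw * 1e9 / GPU_COUNT

print(f"ratio: {ratio:,.0f}x ({orders:.2f} orders of magnitude)")
print(f"average draw: {avg_power_gw:.1f} GW")
print(f"implied per-GPU draw: {watts_per_gpu:.0f} W")
```

Under those assumptions the implied draw works out to about 700 W per GPU, which lines up with the rated maximum power of an H100 SXM card. So the 613,000 GWh figure looks like GPU power only, with no cooling or datacenter overhead on top.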

Energy is a core dependency for AGI

Caveats

1/ AI could lead to energy breakthroughs. This is recursive self-improvement in a different form, since it improves the foundation that training runs on. That would make timelines shorter.

2/ Inference will take a huge share of GPU demand. Training doesn't get all the GPUs. That would make timelines longer.

H/T great convos with @KSimback

Link to ChatGPT Question:
