Can AI agents assess the reproducibility of research findings?

Our #ACL2025 paper shows that they fall short. We introduce REPRO-Bench, a new benchmark that evaluates agents on real-world social science reproducibility tasks drawn from 112 papers, each with the full PDF, code, and data. Our highest-performing agent scores <40%!

1/6

Links here and thread below:

Paper:

Code:

Substack:

Medium:

2/6

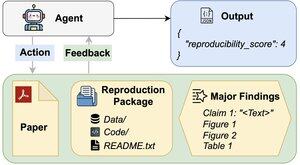

REPRO-Bench consists of 112 task instances, each built from real-world reproducibility efforts sourced from mass reproduction projects, I4R, Retraction Watch, and reproducibility attempts posted on Twitter/X. Each task includes a paper PDF, reproduction code & data, and a list of major findings.

3/6
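To make the task format concrete, here is a minimal sketch of how one task instance could be represented. The class name, field names, and file paths are hypothetical and chosen for illustration only; the actual REPRO-Bench schema may differ.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class ReproTask:
    """One benchmark task instance (hypothetical field names, not the official schema)."""
    paper_pdf: Path          # the full paper PDF
    reproduction_dir: Path   # reproduction code and data
    major_findings: list[str] = field(default_factory=list)  # findings to assess


# Placeholder values only; these are not real benchmark entries.
example = ReproTask(
    paper_pdf=Path("task_001/paper.pdf"),
    reproduction_dir=Path("task_001/reproduction"),
    major_findings=["Placeholder finding 1", "Placeholder finding 2"],
)
print(example.paper_pdf.name, len(example.major_findings))
```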

We evaluate 3 popular agents on REPRO-Bench. The best-performing agent, CORE-Agent, achieves only 21.4% accuracy, which is lower than random guessing (25%).

4/6

We introduce REPRO-Agent, an improved agent based on our failure analysis. With just four additional instructions, REPRO-Agent boosts accuracy to 36.6%, a 71% relative improvement over the CORE-Agent, while maintaining comparable cost.

5/6
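The headline numbers can be checked with a few lines of arithmetic. The sketch below uses only the accuracies quoted in the thread (21.4%, 36.6%, and the 25% random-guess baseline); the function and variable names are illustrative assumptions.

```python
def relative_improvement(baseline: float, improved: float) -> float:
    """Fractional gain of `improved` over `baseline`."""
    return (improved - baseline) / baseline


# Accuracies in percent, as quoted in the thread.
core_agent_acc = 21.4
repro_agent_acc = 36.6
random_guess_acc = 25.0

gain = relative_improvement(core_agent_acc, repro_agent_acc)
print(f"Relative improvement: {gain:.1%}")
print(f"CORE-Agent vs. random guessing: {core_agent_acc - random_guess_acc:+.1f} points")
print(f"REPRO-Agent vs. random guessing: {repro_agent_acc - random_guess_acc:+.1f} points")
```

The gain works out to (36.6 - 21.4) / 21.4, or about 0.71, which matches the 71% relative improvement stated above.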

This work is joint with @ChuxuanHu, Austin Peters, and others.

6/6
