
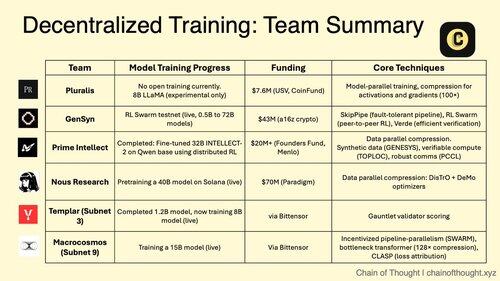

1/ Decentralized AI training is shifting from impossible to inevitable.

The era of centralized AI monopolies is being challenged by breakthrough protocols that enable collaborative model training across distributed networks.

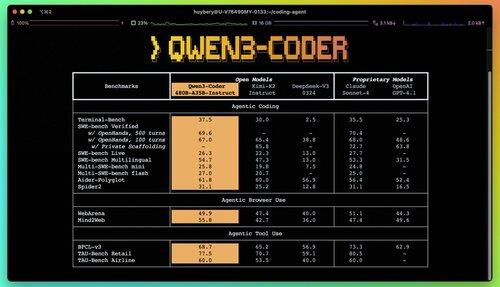

2/ Primer Alert: Qwen 3 Coder just dropped and it's a game changer.

The new 480B-parameter MoE model with 35B active parameters achieves state-of-the-art performance in agentic coding tasks, rivaling Claude Sonnet 4.

This fully open-source coder supports 256K context natively and 1M with extrapolation.

3/ Pluralis is pioneering "Protocol Learning", a radical approach where models are trained collaboratively but never fully materialized in any single location.

A $7.6M seed round from USV and CoinFund backs their vision of truly open AI, where contributors share economic upside without surrendering model control.

@PluralisHQ

4/ Nous Research raised $50M to challenge OpenAI with community-governed models.

Their upcoming 40B parameter "Consilience" model uses the Psyche framework with JPEG-inspired compression to enable efficient distributed training across heterogeneous hardware.

5/ Gensyn has secured ~$51M to date to build the "global machine learning cluster."

Their protocol connects underutilized compute worldwide, from data centers to gaming laptops, creating a decentralized cloud alternative that could achieve unit economics surpassing centralized providers.

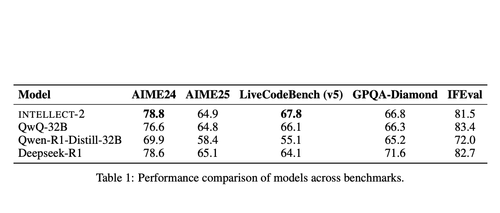

@gensynai

6/ Prime Intellect proved decentralized training works with INTELLECT-2.

Their 32B parameter model was trained via globally distributed RL using the PRIME-RL framework, demonstrating that frontier models can be built across continents without sacrificing performance.

@PrimeIntellect

7/ Bittensor subnets are specializing for AI workloads:

- Subnet 3 (Templar) focuses on distributed training validation.

- Subnet 9 (Macrocosmos) enables collaborative model development, creating economic incentives for participants in the neural network.

8/ The decentralized training thesis: Aggregate more compute than any single entity, democratize access to AI development, and prevent concentration of power.

As reasoning models drive inference costs higher than training, distributed networks may become essential infrastructure in the AI economy.
