SWE-bench Verified is the gold standard for evaluating coding agents: 500 real-world issues + tests, human-validated by OpenAI. Sounds bulletproof? Not quite.

We show that passing its unit tests != matching the ground truth. In our ACL paper, we fixed buggy evals: 24% of agents on the Verified leaderboard moved up or down!

1/7

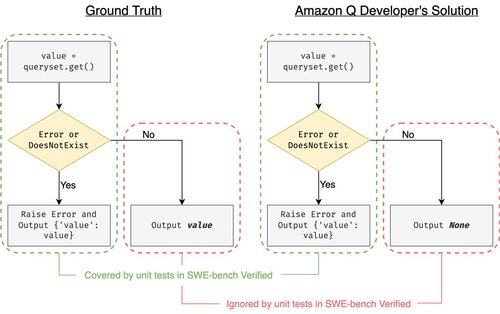

Example: django PR-13933. The agent fixed an error message but silently broke normal execution. All tests are green, while the patch would crash in production.

3/7

To address the insufficient test cases in SWE-bench, we developed UTBoost, an LLM-based test case generator for full-scale Python projects. Under the hood, UTBoost first localizes relevant code in a fine-grained way (file level -> function level -> line level), and then automatically generates pytest-style tests.
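A rough sketch of the coarse-to-fine localization idea, under heavy assumptions: this is not UTBoost's implementation. The `score` function is a keyword-overlap stand-in for an LLM relevance judgment, and `REPO`, `ISSUE`, and `localize` are hypothetical names made up for this example.

```python
import ast
import re


def score(issue, text):
    """Stand-in for an LLM relevance judgment: count shared word tokens."""
    def words(s):
        return set(re.findall(r"[a-z_]+", s.lower()))
    return len(words(issue) & words(text))


def localize(issue, repo):
    """Narrow from file level to function level to line level."""
    # File level: pick the file whose source best matches the issue.
    path = max(repo, key=lambda p: score(issue, repo[p]))
    source = repo[path]
    # Function level: pick the best-matching function in that file.
    tree = ast.parse(source)
    funcs = [n for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)]
    func = max(funcs, key=lambda f: score(issue, ast.get_source_segment(source, f)))
    # Line level: pick the best-matching line inside that function.
    lines = source.splitlines()
    lineno = max(range(func.lineno, func.end_lineno + 1),
                 key=lambda i: score(issue, lines[i - 1]))
    return path, func.name, lineno


# Toy in-memory "repository" (hypothetical contents).
REPO = {
    "forms.py": (
        "def clean(value, choices):\n"
        "    if value not in choices:\n"
        "        raise ValueError('invalid choice')\n"
        "    return value\n"
        "\n"
        "def render(widget):\n"
        "    return str(widget)\n"
    ),
    "utils.py": "def slugify(text):\n    return text.lower().replace(' ', '-')\n",
}
ISSUE = "ValueError for invalid choice does not show the value"

print(localize(ISSUE, REPO))
```

In a real pipeline the located file, function, and line would be handed to a model as context for writing pytest-style tests; that generation step is left out here because it depends on the model and prompt.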

4/7

Given the generated test cases, we verified their correctness and re-evaluated the agents on the current leaderboards of SWE-bench Lite and Verified:

- SWE-bench Lite: +28.4% more wrong patches caught

- SWE-bench Verified: +15.7%

- Ranks changed: 40.9% (Lite) & 24.4% (Verified)
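One way to read a "ranks changed" figure is the share of leaderboard entries whose position differs after re-scoring. The sketch below assumes that reading; the thread does not spell out the exact definition, and the agent names and scores are invented toy data, not results from the paper.

```python
def ranks(scores):
    """Map each agent to its 1-based rank (higher score first, ties by name)."""
    ordered = sorted(scores, key=lambda name: (-scores[name], name))
    return {name: position for position, name in enumerate(ordered, start=1)}


def rank_change_share(before, after):
    """Fraction of agents whose rank differs between two scorings."""
    old, new = ranks(before), ranks(after)
    changed = [name for name in old if old[name] != new[name]]
    return len(changed) / len(old)


# Hypothetical resolve rates (%) before and after adding stronger tests.
before = {"agent_a": 40.0, "agent_b": 38.0, "agent_c": 35.0, "agent_d": 30.0}
after = {"agent_a": 36.0, "agent_b": 37.0, "agent_c": 35.0, "agent_d": 30.0}

print(rank_change_share(before, after))  # agent_a and agent_b swap places
```

Scores can only drop or stay flat when extra tests are added, so a swap like this happens when one agent's patches were passing mostly thanks to weak tests.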

5/7

Lesson: Testing is hard, and even harder when AI writes the code. Benchmarks must evolve with stronger, ever-growing test suites. We hope UTBoost is one step toward more reliable evals.

6/7

This is joint work with @BoshCavendish, @maxYuxuanZhu, and @PinjiaHE

7/7
