so here's a question:

i was thinking about the structure of english and how it might affect the learning of positional embeddings.

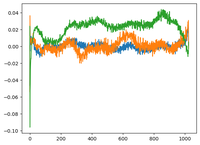

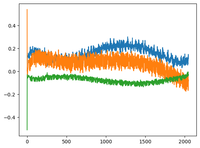

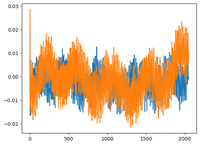

i went back to @karpathy's GPT-2 video, where he plots GPT-2's wpe (learned position embedding) matrix: the values of 3 specific channels (out of the 768 dimensions) as a function of position (0 to 1023, i.e. the 1024-token context).

he pointed out that the learned position embeddings have structure in them. i got curious and made the same plot for 2 more open-source models, EleutherAI/gpt-neo-125M and facebook/opt-125m, and got what looks like the same result (i guess?).
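a minimal sketch of how these plots could be reproduced, assuming `torch`, `transformers` and `matplotlib` are installed. the helper names are hypothetical, the channel indices (150, 200, 250) are an arbitrary assumed pick, and the OPT handling assumes the transformers implementation keeps 2 extra offset rows at the start of its position table. `dominant_period` is an extra, hypothetical way to compare the curves across models by FFT instead of by eye.

```python
import numpy as np


def load_position_embeddings(model_name: str) -> np.ndarray:
    """Hypothetical helper: return the learned position table as (positions, d_model).

    Needs torch + transformers and network access to download the weights.
    """
    from transformers import AutoModel  # lazy import so the other helpers work without it

    model = AutoModel.from_pretrained(model_name)
    if hasattr(model, "wpe"):
        # GPT-2 and GPT-Neo both store the learned table in `wpe`
        weight = model.wpe.weight
    else:
        # assumption: OPT's table has 2 leading offset rows; drop them so row i = position i
        weight = model.decoder.embed_positions.weight[2:]
    return weight.detach().cpu().numpy()


def channel_curves(wpe: np.ndarray, channels) -> np.ndarray:
    """Row k is embedding channel channels[k] as a function of position."""
    return np.asarray(wpe)[:, list(channels)].T


def dominant_period(curve: np.ndarray) -> float:
    """Strongest period (in positions) of a 1-D curve, estimated from its FFT magnitude."""
    curve = np.asarray(curve, dtype=float)
    spectrum = np.abs(np.fft.rfft(curve - curve.mean()))
    freqs = np.fft.rfftfreq(curve.size)  # cycles per position
    k = int(np.argmax(spectrum[1:])) + 1  # skip the zero-frequency bin
    return 1.0 / freqs[k]


def plot_channels(wpe: np.ndarray, channels, title: str = "") -> None:
    """Plot the selected channels against position, like the plots above."""
    import matplotlib.pyplot as plt

    for ch, curve in zip(channels, channel_curves(wpe, channels)):
        plt.plot(curve, label=f"channel {ch}")
    plt.xlabel("position")
    plt.ylabel("embedding value")
    plt.title(title)
    plt.legend()
    plt.show()


# usage (downloads weights):
# for name in ["gpt2", "EleutherAI/gpt-neo-125M", "facebook/opt-125m"]:
#     plot_channels(load_position_embeddings(name), channels=(150, 200, 250), title=name)
```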

in the original transformer paper ("Attention Is All You Need"), the authors used fixed sinusoidal functions for the positional encodings. so why do models trained on natural language end up learning sinusoid-like structure on their own?
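for comparison, a minimal numpy sketch of the fixed encoding from that paper, PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)). the paper's stated motivation was that for any fixed offset k, PE(pos + k) is a linear function of PE(pos); `shift_matrix` (a hypothetical helper name) builds that linear map explicitly: each (sin, cos) pair just gets rotated by k times its frequency.

```python
import numpy as np


def sinusoidal_positional_encoding(n_positions: int, d_model: int, base: float = 10000.0) -> np.ndarray:
    """PE[pos, 2i] = sin(pos * w_i), PE[pos, 2i+1] = cos(pos * w_i), with w_i = base^(-2i/d)."""
    assert d_model % 2 == 0, "d_model must be even"
    pos = np.arange(n_positions)[:, None]        # (n_positions, 1)
    two_i = np.arange(0, d_model, 2)[None, :]    # (1, d_model/2): 0, 2, 4, ...
    angles = pos / base ** (two_i / d_model)     # (n_positions, d_model/2)
    pe = np.empty((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


def shift_matrix(k: int, d_model: int, base: float = 10000.0) -> np.ndarray:
    """Block-diagonal M_k with PE[pos + k] = M_k @ PE[pos] for every pos."""
    w = 1.0 / base ** (np.arange(0, d_model, 2) / d_model)
    m = np.zeros((d_model, d_model))
    for j, wj in enumerate(w):
        c, s = np.cos(k * wj), np.sin(k * wj)
        # sin(a + b) = sin(a)cos(b) + cos(a)sin(b)
        m[2 * j, 2 * j], m[2 * j, 2 * j + 1] = c, s
        # cos(a + b) = cos(a)cos(b) - sin(a)sin(b)
        m[2 * j + 1, 2 * j], m[2 * j + 1, 2 * j + 1] = -s, c
    return m
```

plotting a few columns of `sinusoidal_positional_encoding(1024, 768)` gives clean sines and cosines whose frequency drops as the channel index grows, which is a handy reference next to the learned curves.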

is it because english itself has a sinusoidal structure (subjects usually precede verbs, clauses follow a temporal or causal order, etc.)?